Async programming in Rust lets you run code that doesn’t block the thread while it waits for something like a file read, a network call, or a delay. You can pause part of your logic without freezing the whole system. Even though async functions look like they run top to bottom, that’s not how they work. The compiler rewrites them into state machines that pause at certain points and pick up later. That’s what makes .await work the way it does. If you know what’s actually happening behind the scenes, it’s easier to write code that behaves the way you expect.

What Async Functions Really Are

Rust async functions don’t behave like normal functions. At a glance, they look like they run top to bottom just like any other block of code, but what’s actually happening is very different. Calling one doesn’t start it. It builds a value that knows what to do when it is run, but it holds off until something drives it forward. This is part of what makes async programming in Rust structured the way it is. Async functions don’t do the work themselves. They build something that acts like a recipe for the work, and that recipe only runs when an executor polls it. That’s why you often need a runtime like Tokio or async-std to actually see results.

Async Functions Return Futures

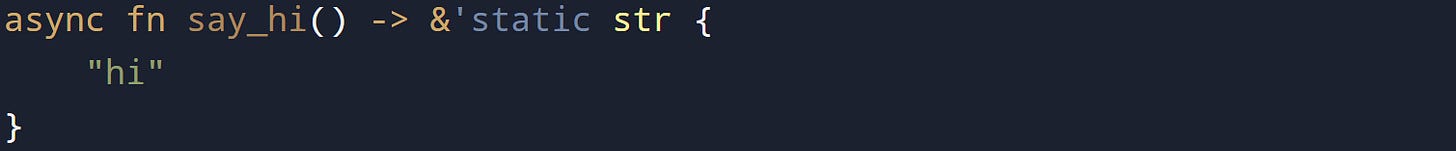

When you write an async function, the compiler rewrites it to return a type that implements the Future trait. Here’s what that looks like in practice:
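A minimal sketch of the idea, assuming say_hi simply returns the string "hi". The BODY_RAN flag and the assert_is_future helper are hypothetical additions, included only so the laziness can be observed:

```rust
use std::future::Future;
use std::sync::atomic::{AtomicBool, Ordering};

// Hypothetical flag, used only to observe whether the body has run.
static BODY_RAN: AtomicBool = AtomicBool::new(false);

async fn say_hi() -> &'static str {
    BODY_RAN.store(true, Ordering::SeqCst);
    "hi"
}

// Compiles only if the argument is a future that resolves to a &'static str.
fn assert_is_future<F: Future<Output = &'static str>>(_fut: &F) {}

fn main() {
    // Builds the future. The body of say_hi has not started.
    let fut = say_hi();
    assert_is_future(&fut);
    println!("body ran after the call? {}", BODY_RAN.load(Ordering::SeqCst));
}
```

Nothing in main ever polls the future, so the flag is still false when it is printed.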

Calling say_hi() won’t print anything or even return "hi". What you get back is a future that needs to be .awaited before it does anything:
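Something along these lines, as a sketch. The body of greet and the poll_once helper are assumptions made for illustration; poll_once stands in for a runtime by polling the future a single time with a waker that does nothing:

```rust
use std::future::Future;
use std::pin::pin;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};

async fn say_hi() -> &'static str {
    "hi"
}

async fn greet() -> String {
    // say_hi() builds the inner future; .await drives it from inside greet().
    let word = say_hi().await;
    format!("{word}, world")
}

// A waker that does nothing; enough for a future that never returns Pending.
struct NoopWaker;

impl Wake for NoopWaker {
    fn wake(self: Arc<Self>) {}
}

// Hypothetical helper: polls a future exactly once, standing in for a runtime.
fn poll_once<F: Future>(fut: F) -> Poll<F::Output> {
    let waker = Waker::from(Arc::new(NoopWaker));
    let mut cx = Context::from_waker(&waker);
    let mut fut = pin!(fut);
    fut.as_mut().poll(&mut cx)
}

fn main() {
    let fut = greet(); // still nothing has run
    let result = poll_once(fut); // now greet() and say_hi() both execute
    println!("{result:?}");
}
```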

The call to say_hi() builds the future. The .await suspends greet() until that future finishes. But greet() is itself only a future, so none of this runs until something starts polling greet().

A future in Rust is anything that implements this trait:
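The trait has the following shape. It is redeclared locally here only so it can be read in one place; real code should use std::future::Future. The describe helper is a hypothetical addition that names the two possible answers:

```rust
use std::pin::Pin;
use std::task::{Context, Poll};

// Same shape as std::future::Future, redeclared here only for reading.
// Real code should use the standard library's trait.
pub trait Future {
    type Output;

    fn poll(self: Pin<&mut Self>, cx: &mut Context<'_>) -> Poll<Self::Output>;
}

// Hypothetical helper that names the two possible answers from poll.
fn describe<T>(answer: &Poll<T>) -> &'static str {
    match answer {
        Poll::Pending => "not yet",
        Poll::Ready(_) => "done",
    }
}

fn main() {
    println!("{}", describe(&Poll::Ready(1)));
    println!("{}", describe(&Poll::<i32>::Pending));
}
```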

It has one job. It gets polled, and it answers either “not yet” or “done.” That’s it.

To show what’s going on, you can build one by hand like this:
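One possible hand-written future, as a sketch. The name AlwaysReady, the value 42, and the poll_once helper are all illustrative choices, not taken from any library:

```rust
use std::future::Future;
use std::pin::{pin, Pin};
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};

// A future with no state at all: it is finished the first time it is asked.
struct AlwaysReady;

impl Future for AlwaysReady {
    type Output = u32;

    fn poll(self: Pin<&mut Self>, _cx: &mut Context<'_>) -> Poll<Self::Output> {
        Poll::Ready(42)
    }
}

// A waker that does nothing; this future never asks to be woken.
struct NoopWaker;

impl Wake for NoopWaker {
    fn wake(self: Arc<Self>) {}
}

// Hypothetical helper that polls a future a single time.
fn poll_once<F: Future>(fut: F) -> Poll<F::Output> {
    let waker = Waker::from(Arc::new(NoopWaker));
    let mut cx = Context::from_waker(&waker);
    let mut fut = pin!(fut);
    fut.as_mut().poll(&mut cx)
}

fn main() {
    println!("{:?}", poll_once(AlwaysReady));
}
```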

This future is boring, but it proves the point. It’s ready the moment it’s asked. Most real futures will return Poll::Pending at least once before they complete.

The Future Trait and Polling

Rust’s async model uses polling to check futures and move them forward. That polling happens inside an executor, which may run on a single thread or spread the work across a pool of threads. Either way, something, usually a runtime, has to ask your future whether it’s finished yet. If it isn’t, the future returns control and waits to be told when it’s worth checking again.

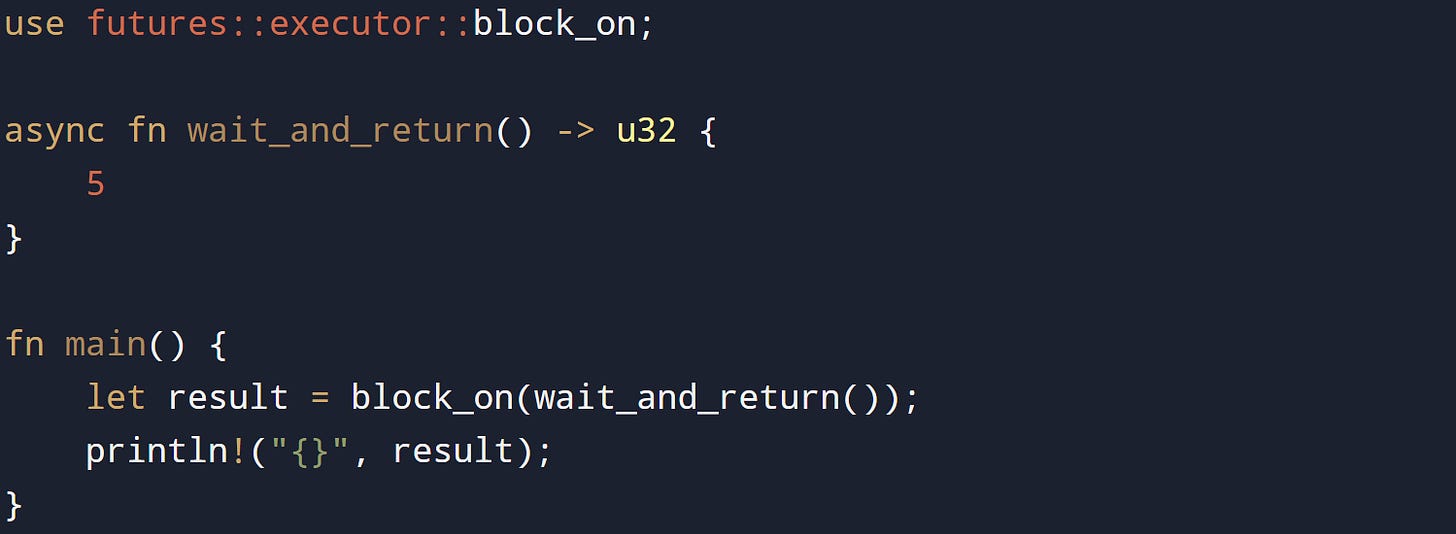

Here’s what that looks like at the caller level:
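Runtimes ship their own block_on. To keep this sketch free of external crates, the version below is a hand-rolled stand-in built only on the standard library, following the thread-parking pattern shown in the std::task::Wake documentation. The answer function is a made-up example:

```rust
use std::future::Future;
use std::pin::pin;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};
use std::thread::{self, Thread};

// Wakes the blocked thread by unparking it.
struct ThreadWaker(Thread);

impl Wake for ThreadWaker {
    fn wake(self: Arc<Self>) {
        self.0.unpark();
    }
}

// Minimal stand-in for a runtime's block_on: poll, and sleep while Pending.
fn block_on<F: Future>(fut: F) -> F::Output {
    let mut fut = pin!(fut);
    let waker = Waker::from(Arc::new(ThreadWaker(thread::current())));
    let mut cx = Context::from_waker(&waker);
    loop {
        match fut.as_mut().poll(&mut cx) {
            Poll::Ready(value) => return value,
            // Sleep until the waker unparks this thread, then poll again.
            Poll::Pending => thread::park(),
        }
    }
}

async fn answer() -> u32 {
    6 * 7
}

fn main() {
    let value = block_on(answer());
    println!("{value}");
}
```

From the caller's side there is only one call; the loop and the parking stay out of sight.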

The block_on function runs the future by polling it until it says it’s ready. Behind the scenes, that polling might pause and return many times, but block_on hides that from you.

It’s the runtime’s job to figure out when to poll again. That’s where the Context and Waker come in. Each call to poll receives a Context, and that Context carries a Waker supplied by the runtime. When a future isn’t ready, it holds on to that Waker and arranges for it to be called once progress is possible, which tells the runtime to poll again. Most async code never touches this directly, but it’s part of why the model works.
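To make the waker handshake visible, here is a small sketch. YieldOnce, CountingWaker, and drive are hypothetical names: the future reports Pending on its first poll and asks to be woken right away, and the waker just counts how many times it was called:

```rust
use std::future::Future;
use std::pin::{pin, Pin};
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};

// Counts wake-ups instead of scheduling anything.
struct CountingWaker {
    wakes: AtomicUsize,
}

impl Wake for CountingWaker {
    fn wake(self: Arc<Self>) {
        self.wakes.fetch_add(1, Ordering::SeqCst);
    }
}

// Pending on the first poll, Ready on the second.
struct YieldOnce {
    yielded: bool,
}

impl Future for YieldOnce {
    type Output = &'static str;

    fn poll(mut self: Pin<&mut Self>, cx: &mut Context<'_>) -> Poll<Self::Output> {
        if self.yielded {
            Poll::Ready("done")
        } else {
            self.yielded = true;
            // Use the waker from the Context to request another poll.
            cx.waker().wake_by_ref();
            Poll::Pending
        }
    }
}

// Polls twice by hand and reports what happened in between.
fn drive() -> (Poll<&'static str>, usize, Poll<&'static str>) {
    let counter = Arc::new(CountingWaker { wakes: AtomicUsize::new(0) });
    let waker = Waker::from(counter.clone());
    let mut cx = Context::from_waker(&waker);
    let mut fut = pin!(YieldOnce { yielded: false });

    let first = fut.as_mut().poll(&mut cx);
    let wakes_after_first = counter.wakes.load(Ordering::SeqCst);
    let second = fut.as_mut().poll(&mut cx);
    (first, wakes_after_first, second)
}

fn main() {
    println!("{:?}", drive());
}
```

A real leaf future would usually store the waker and call it later, from a timer or an I/O event, rather than waking itself immediately.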

How the Compiler Transforms Async Code

Writing async code in Rust feels like writing synchronous code, but what the compiler builds out of it isn’t anything like a regular function. Async blocks and functions don’t run line by line the way they look. Instead, the compiler turns them into a kind of state machine that keeps track of what to do next. That transformation is what makes .await work, and it’s what lets async code yield control until it’s ready to move forward.

Each time a function reaches an .await whose future isn’t ready, it’s paused, and the values it still needs are stored in a custom type the compiler generates. The rest of the function doesn’t run until the paused part is woken and the future is polled again.

State Machines and Await Points

Every async function gets rewritten as a new type. That type includes fields for the local variables that have to survive across an .await, fields for the futures currently being awaited, and a field that tracks which part of the function to run next. The result is much like a state machine you could have written by hand.

Let’s say you write this:
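A plausible version of that code, as a sketch. add_async is the name used in the text; the outer function name compute and the doubling step are assumptions. The block_on helper is a small standard-library stand-in for a runtime so the example can run without extra crates:

```rust
use std::future::Future;
use std::pin::pin;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};
use std::thread::{self, Thread};

async fn add_async(a: i32, b: i32) -> i32 {
    a + b
}

// Step one: wait for the sum. Step two: double it.
async fn compute(x: i32, y: i32) -> i32 {
    let sum = add_async(x, y).await;
    sum * 2
}

// Wakes the blocked thread by unparking it.
struct ThreadWaker(Thread);

impl Wake for ThreadWaker {
    fn wake(self: Arc<Self>) {
        self.0.unpark();
    }
}

// Stand-in for a runtime: poll until Ready, parking the thread while Pending.
fn block_on<F: Future>(fut: F) -> F::Output {
    let mut fut = pin!(fut);
    let waker = Waker::from(Arc::new(ThreadWaker(thread::current())));
    let mut cx = Context::from_waker(&waker);
    loop {
        match fut.as_mut().poll(&mut cx) {
            Poll::Ready(value) => return value,
            Poll::Pending => thread::park(),
        }
    }
}

fn main() {
    println!("{}", block_on(compute(2, 3)));
}
```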

That code feels like a normal two-step calculation, but the compiler breaks it apart. The function gets turned into something that remembers where it left off. If the call to add_async(x, y) isn’t ready yet, the function won’t go any further. It’ll save the current values and exit. Later, when the future completes, it picks up where it paused.

Internally, the rewritten future would keep track of a state, something like this:
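The compiler's generated type is anonymous and its exact variants are an implementation detail, so the enum below is only a hand-written approximation for a function with a single .await. The variant names and the next helper are illustrative:

```rust
#[derive(Debug, Clone, Copy, PartialEq)]
enum State {
    // Nothing has run yet.
    Start,
    // Suspended at the .await on add_async(x, y).
    WaitingOnAdd,
    // The result has been handed back; polling again would be a bug.
    Done,
}

impl State {
    // Hypothetical helper: the state that follows once the current step finishes.
    fn next(self) -> State {
        match self {
            State::Start => State::WaitingOnAdd,
            State::WaitingOnAdd | State::Done => State::Done,
        }
    }
}

fn main() {
    let mut state = State::Start;
    while state != State::Done {
        println!("{state:?}");
        state = state.next();
    }
}
```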

That state decides which part of the logic to run when the function is polled. The struct that holds the future would have fields for the input values and any variables that had already been computed. The code that handles polling would read the state, jump into the matching block, and update the state when moving forward.

Resuming doesn’t rely on any special jump back into the middle of a function. Conceptually, it’s a match expression on the stored state that gets evaluated each time the future is polled. This is what lets async work without dedicating a thread to each task. The current step is paused, stored, and resumed later.
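Putting the struct, the state field, and the match together, a hand-written equivalent might look roughly like this. It is a sketch, not the compiler's actual output: Compute, AddAsync, and poll_twice are made-up names, and AddAsync deliberately reports Pending once so the pause is visible:

```rust
use std::future::Future;
use std::pin::{pin, Pin};
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};

// Stand-in for add_async: Pending on the first poll, then the sum.
struct AddAsync { a: i32, b: i32, yielded: bool }

impl Future for AddAsync {
    type Output = i32;

    fn poll(mut self: Pin<&mut Self>, cx: &mut Context<'_>) -> Poll<i32> {
        if self.yielded {
            Poll::Ready(self.a + self.b)
        } else {
            self.yielded = true;
            cx.waker().wake_by_ref(); // ask to be polled again
            Poll::Pending
        }
    }
}

enum State { Start, WaitingOnAdd(AddAsync), Done }

// Holds the inputs plus the state that says where to resume.
struct Compute { x: i32, y: i32, state: State }

impl Future for Compute {
    type Output = i32;

    fn poll(self: Pin<&mut Self>, cx: &mut Context<'_>) -> Poll<i32> {
        let this = self.get_mut(); // allowed here because every field is Unpin
        loop {
            match &mut this.state {
                State::Start => {
                    let add = AddAsync { a: this.x, b: this.y, yielded: false };
                    this.state = State::WaitingOnAdd(add);
                }
                State::WaitingOnAdd(add) => match Pin::new(add).poll(cx) {
                    // Inner future not ready: the outer one pauses too.
                    Poll::Pending => return Poll::Pending,
                    Poll::Ready(sum) => {
                        this.state = State::Done;
                        return Poll::Ready(sum * 2);
                    }
                },
                State::Done => panic!("polled after completion"),
            }
        }
    }
}

struct NoopWaker;

impl Wake for NoopWaker {
    fn wake(self: Arc<Self>) {}
}

// Polls the state machine twice by hand and returns both answers.
fn poll_twice(x: i32, y: i32) -> (Poll<i32>, Poll<i32>) {
    let waker = Waker::from(Arc::new(NoopWaker));
    let mut cx = Context::from_waker(&waker);
    let mut fut = pin!(Compute { x, y, state: State::Start });
    let first = fut.as_mut().poll(&mut cx);
    let second = fut.as_mut().poll(&mut cx);
    (first, second)
}

fn main() {
    println!("{:?}", poll_twice(2, 3));
}
```

The first poll moves from Start to WaitingOnAdd and stops there; the second poll picks up in WaitingOnAdd and finishes.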

Lowered Code View

You don’t have to guess what the compiler builds. You can actually see what it turns your async function into by asking it to dump the lowered code. Using the nightly compiler with flags such as -Zunpretty=hir or -Zdump-mir will give you a look at the transformed output. The HIR view shows the desugared async body, and the MIR output shows how the generated future keeps its saved variables and switches on an internal state to drive execution.

To get an idea of what this looks like in a controlled example, try running this through Compiler Explorer with Rust’s MIR output view:
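Any small async function with no .await in it will do. The one below is a made-up example (the name simple and its body are assumptions), kept tiny so the generated output stays readable:

```rust
// No .await inside, yet calling this still produces a future.
pub async fn simple() -> u32 {
    let x = 40;
    x + 2
}

fn main() {
    // Building the future is enough for the compiler to generate its type.
    let _future = simple();
}
```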

The compiler still creates a struct and match-based poll method even if there are no .await calls. That’s because async functions always produce futures, and futures always need a poll method.

The interesting parts come in when .await is involved. That’s when the compiler builds a state machine with pause points and stores in-progress computations.

What .await Does During Execution

The .await keyword looks like a pause. What it actually does is trigger the polling of another future. If that future isn’t done, .await tells the outer future to pause too.

Behind the scenes, .await is more like a call to a helper method that checks the status of the inner future. If the result is Poll::Pending, then the current future also returns Poll::Pending. If the inner one is ready, the outer one moves forward. This works because the compiler rewrites .await into code that does this polling check and moves to the next state if the future is ready.

Here’s a small example that shows this in a reduced form:
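The sketch below uses the delayed_add and slow_double names from the text. Everything else is an assumption: YieldOnce is a hypothetical stand-in for real waiting such as a timer, and run_counting is a tiny poll loop that also counts how many polls were needed:

```rust
use std::future::Future;
use std::pin::{pin, Pin};
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};

// Stand-in for real waiting: Pending on the first poll, Ready on the second.
struct YieldOnce(bool);

impl Future for YieldOnce {
    type Output = ();

    fn poll(mut self: Pin<&mut Self>, cx: &mut Context<'_>) -> Poll<()> {
        if self.0 {
            Poll::Ready(())
        } else {
            self.0 = true;
            cx.waker().wake_by_ref();
            Poll::Pending
        }
    }
}

async fn slow_double(n: u32) -> u32 {
    YieldOnce(false).await;
    n * 2
}

async fn delayed_add(a: u32, b: u32) -> u32 {
    let x = slow_double(a).await; // first pause point
    let y = slow_double(b).await; // second pause point
    x + y
}

struct NoopWaker;

impl Wake for NoopWaker {
    fn wake(self: Arc<Self>) {}
}

// Polls until Ready and reports how many polls it took.
fn run_counting<F: Future>(fut: F) -> (F::Output, usize) {
    let waker = Waker::from(Arc::new(NoopWaker));
    let mut cx = Context::from_waker(&waker);
    let mut fut = pin!(fut);
    let mut polls = 0;
    loop {
        polls += 1;
        if let Poll::Ready(out) = fut.as_mut().poll(&mut cx) {
            return (out, polls);
        }
    }
}

fn main() {
    let (sum, polls) = run_counting(delayed_add(1, 2));
    println!("sum = {sum}, polls = {polls}");
}
```

With these stand-ins the future needs three polls: two that come back Pending, one for each slow_double call, and a third that returns the sum.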

That function can pause twice, once at each .await. The compiler generates a separate suspended state for each: one for waiting on slow_double(a) and one for waiting on slow_double(b). It stores a, b, and the intermediate result between the two. The future for delayed_add returns Poll::Pending whenever the call it’s currently waiting on isn’t ready, and it only returns Poll::Ready once both calls have finished.

This is why .await only works inside async functions and async blocks. It expects to be inside a future that is being polled, one that knows how to manage this control flow.

Pin and Self-Referential Structures

The compiler’s transformation produces a struct that stores every variable used across .await calls. In many cases, that includes futures returned by other functions. Those futures often hold references that point to other fields in the same struct. That creates a layout where part of the struct borrows another part of itself.

Normally, Rust wouldn’t allow that. Self-references are unsafe when a value can be moved, because moving it would break those internal references. To deal with this, the async machinery uses Pin.

Pin<&mut Self> guarantees that, once the future is pinned, its storage location won’t change for the rest of its life (unless the type is Unpin, meaning it has no self-references to protect). That lets the compiler safely borrow one part of the future from another, which is needed to make .await work. If you look back at the Future trait, you’ll see that the poll method takes self: Pin<&mut Self>. That’s the safeguard. Every poll receives a pinned reference, and the pin guarantees the future’s address stays stable, so those internal borrows stay valid.

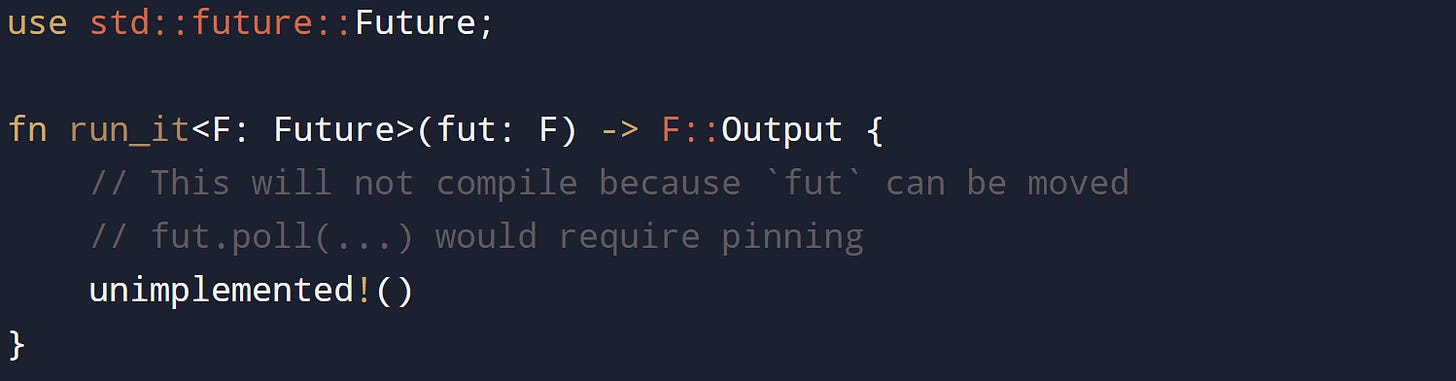

This is also why async functions can’t just be regular iterators or simple closures. They require a fixed structure that won’t be moved after it’s built. The pinning and state tracking are what keep all of this sound. Here’s a brief example of how pinning interacts with real futures. This doesn’t compile without pinning:
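As a sketch of the problem, the commented-out function below tries to call poll through a plain mutable reference, which the compiler rejects because poll takes Pin<&mut Self>. It is left as a comment so the rest of the block still builds. The working version next to it pins the future on the heap first; poll_boxed and NoopWaker are hypothetical names:

```rust
use std::future::Future;
use std::pin::Pin;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};

// Rejected by the compiler: poll takes Pin<&mut Self>, and a plain &mut F
// is not pinned.
//
// fn poll_unpinned<F: Future>(fut: &mut F, cx: &mut Context<'_>) -> Poll<F::Output> {
//     fut.poll(cx)
// }

struct NoopWaker;

impl Wake for NoopWaker {
    fn wake(self: Arc<Self>) {}
}

// Pins the future on the heap, then polls it once through the pinned handle.
fn poll_boxed<F: Future>(fut: F) -> Poll<F::Output> {
    let waker = Waker::from(Arc::new(NoopWaker));
    let mut cx = Context::from_waker(&waker);
    // After Box::pin, the future's address stays fixed for as long as it lives.
    let mut pinned: Pin<Box<F>> = Box::pin(fut);
    pinned.as_mut().poll(&mut cx)
}

fn main() {
    println!("{:?}", poll_boxed(async { 7 }));
}
```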

To fix that, you’d need to use Pin<Box<F>> or a similar pinned type and make sure it stays where it is. Async runtimes handle this for you, so most users don’t have to write it themselves. But it’s the reason everything built from an async function ends up needing pinning in the first place.

Conclusion

Async functions in Rust work by turning into futures that pause and resume through polling. The compiler builds a state machine that keeps track of progress and stores anything it needs to finish the rest later. Each .await marks a spot where the future stops and waits. None of this runs by itself. The future sits idle until something pushes it forward. The struct is free to move right up until an executor (or something like Box::pin) pins the future in place. After that pin step the value stays put, and that fixed location is what keeps any internal references safe. Async in Rust reads like simple logic, but under it all, it’s a careful system of stored state, polling, and safe memory layout.