Cleaning Up Dirty Data Using SQL

Fixing bad input, missing values, and weird formats without leaving your query

Data rarely enters a system in perfect shape. Typos, extra spaces, mismatched formatting, and missing entries show up constantly in real-world tables. These issues can cause reports to miscount, searches to miss valid matches, and users to lose trust in the results. Fixing it doesn’t always need third-party tools or manual cleanup. SQL already gives you the functions to fix most of this directly in your queries. You just need to know what to look for and how the tools work behind the scenes.

Fixing Spacing, Case, and Format Issues with Core Functions

Text that looks the same to a person can act completely different to a database. Extra spaces throw off matches. Different casing causes duplicates to appear separate. Symbols or odd punctuation get in the way of grouping. Let’s walk through the tools SQL gives you to clean up that kind of mess. All of these work at query time, without needing stored procedures or external tools.

How TRIM Works

Trailing and leading spaces aren’t always visible but can quietly break your queries. They show up from copy-pasted inputs, CSVs, and even web forms that collect user data. Let’s say someone types a name with three spaces after it. Visually, nothing seems wrong. But if you try to JOIN on that field or filter with WHERE name = 'Paula', the row might be skipped.

The TRIM() function clears out those extra spaces from both ends. It doesn’t change the column’s actual value unless you run an UPDATE, but it does return a clean result in your query.

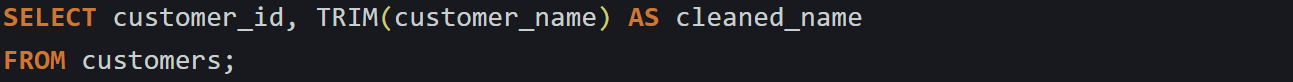

Here’s a quick example using customer names:

This works by scanning from the start of the string and stopping when it hits a non-space character. Then it does the same in reverse from the end. It copies only what’s between those points into the final result. That’s why it only works on the outside, not the middle.

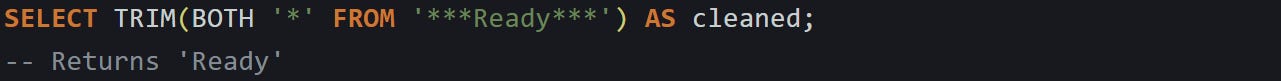

To remove things other than spaces, like asterisks or dashes, you can pass a second argument:

Keep in mind, it still only looks at the edges. It doesn’t scan the full string for every asterisk, just trims from the outside in. If you need to clean inside the string, that’s where REPLACE comes in.

Cleaning Up with REPLACE

If your data has repeated characters, wrong separators, or extra symbols scattered inside, REPLACE can help fix that. It looks for exact matches of a search string and replaces them with whatever you specify. This happens inline in the result.

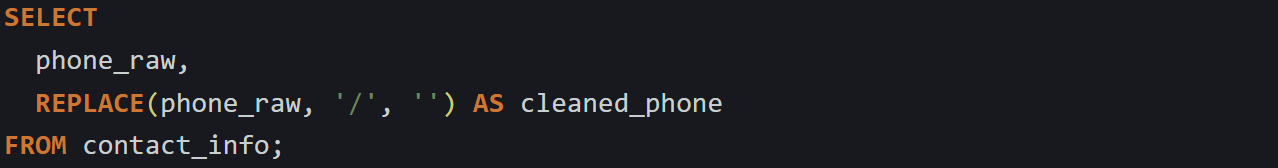

Let’s take a phone number as an example. If some of your rows store numbers with slashes, and others use dashes or nothing at all, the formats won’t match. You can clean them with:

The function walks through each row’s phone_raw value, copying characters to a new buffer. When it finds a match, it skips over the search string and adds the replacement instead. In this case, that’s an empty string, so the slashes vanish.

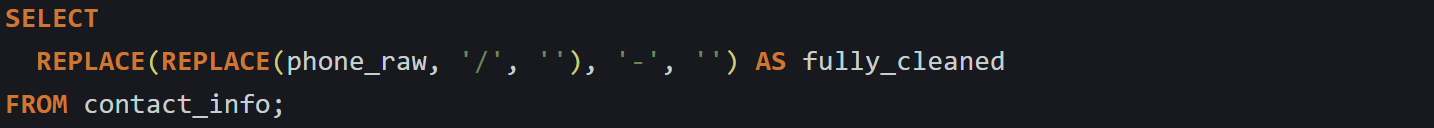

If you need to replace more than one character type, just nest the calls:

You can use this for more than just phone numbers. Log files, product descriptions, or scraped web content often have weird characters that don’t belong. Replacing or stripping them before analysis keeps things cleaner downstream.

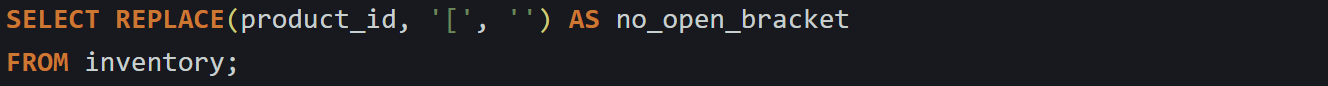

Say you have product IDs with brackets in some rows:

This removes the opening bracket, but if you also want to get rid of the closing one, you can nest again:

These work well for repeated cleanup without writing extra logic. Just keep in mind that it replaces exact text only. It won’t match patterns or character ranges. If your problem is more complex than a simple swap, regex functions might be a better fit, but they’re not always supported the same way across databases.

Adjusting Case for Consistency

Different casing across rows can create silent duplicates. “Order A”, “order a”, and “ORDER A” might all refer to the same thing, but SQL treats them as separate unless the collation is case-insensitive. When you need uniformity, casing functions like LOWER() and UPPER() help smooth things out.

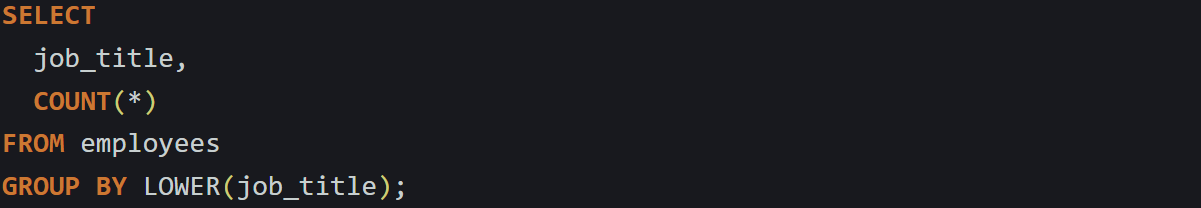

For example, say your users entered job titles into a free-form input field. Some wrote “Engineer”, some typed “engineer”, and others used “ENGINEER”. If you’re trying to group or count them, these won’t match.

This turns every input into lowercase first, then groups them. You could just as easily use UPPER() if that makes more sense for your use case.

These functions don’t rely on pattern scanning. They go character by character, mapping letters based on the character set and collation settings. Most databases handle ASCII consistently, but things like accents or language-specific characters might behave differently depending on those settings.

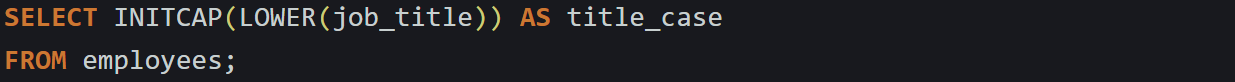

To make sure a display label stays readable, you can combine casing with INITCAP in some databases like Oracle or PostgreSQL:

This gives you a nice result like “Software Engineer” regardless of how it was originally typed. If your database doesn’t support INITCAP, you’ll need to do that kind of formatting outside SQL or write a custom function for it.

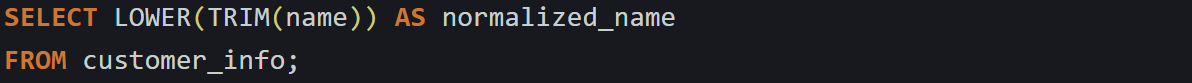

Sometimes it’s not just the casing that’s messy, but a mix of it with odd spacing. You can chain TRIM and LOWER together to clean both problems at once:

That combo strips off extra spaces and normalizes the casing, making it easier to run filters, joins, or comparisons later. You’re not rewriting the original data, just reshaping how the query sees it. For audits or temporary cleanup, that’s often enough.

Handling Missing Data, Defaults, and Inconsistencies

Not every row is broken in the same way. Some are missing values entirely. Others hold something, but it doesn’t line up with how the rest of the data looks. Maybe it’s a null. Maybe it’s just the wrong label or a placeholder like “n/a”. These mismatches slip past simple filters and quietly skew results. They don’t crash queries, but they can throw off counts, block matches, or make reports harder to trust. SQL has ways to catch those differences and shape the output to work more cleanly with the rest of your logic.

Catching Nulls with COALESCE and CASE

Nulls don’t behave like blank strings. They don’t equal anything, not even another null. That’s why comparisons using = won’t catch them. Trying to match a column against an empty string will skip nulls entirely, and string functions like LOWER() or REPLACE() will just return null back if the input is null.

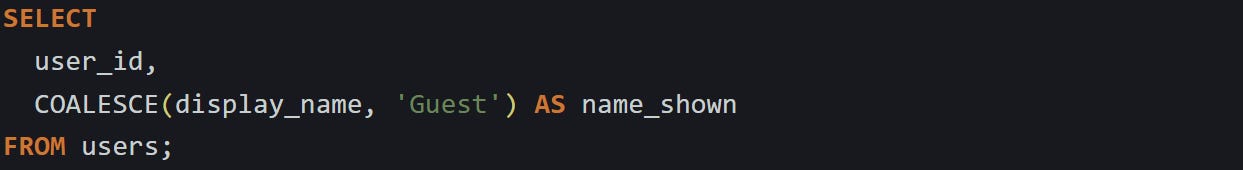

To fill in blanks or assign a fallback value, COALESCE helps. It’s a short function but works by going down a list. It checks each one in order and picks the first thing that isn’t null.

If display_name is null, the query shows "Guest" instead. If there’s a value, it uses that. It doesn’t care if the value is an empty string, just whether it’s null. You can pass more than two values if you need a longer fallback list. This helps when you’ve got columns that were added over time, or optional inputs where not everyone filled in the same thing. You can set a default directly in the query without touching the table schema.

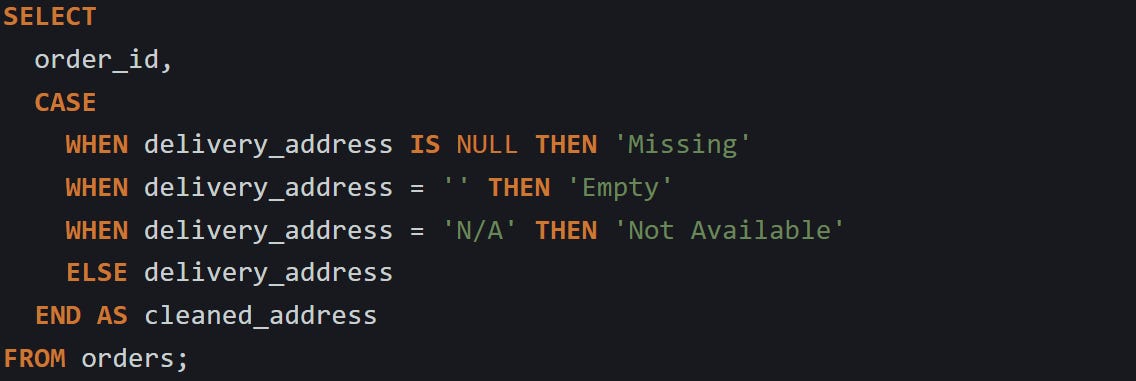

For more detailed cases, you can use CASE to spell out what you want to happen. It gives you more control over how to handle empty strings, placeholders like "n/a", or values that look wrong but aren’t actually null.

This breaks the values into categories. The first match wins, and the rest get ignored. The engine runs this for each row and builds a result column with cleaned-up values. You can also nest CASE inside COALESCE if you want to combine them.

Both COALESCE and CASE run per row and don’t affect the source column.

Fixing Inconsistencies Across Rows

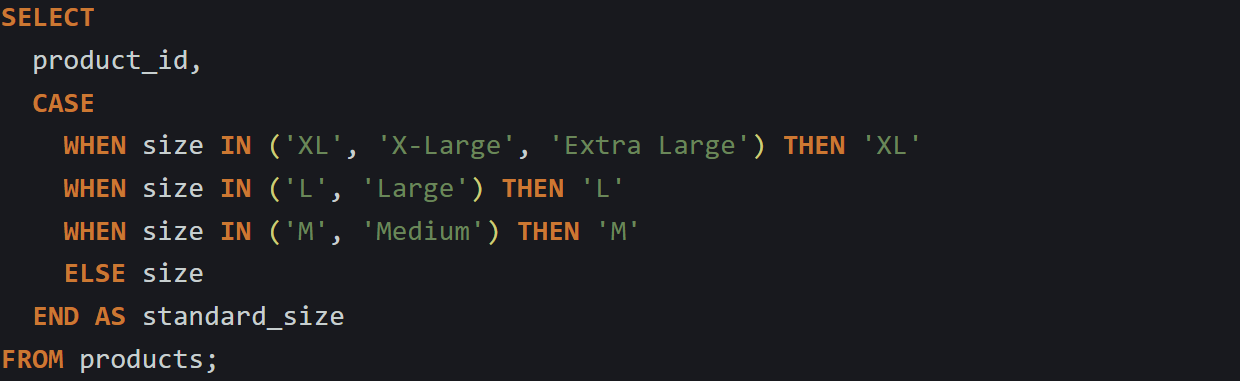

Even when a field has a value, that doesn’t mean it’s consistent. One product might be listed as “XL”, another as “Extra Large”, and another as “X-Large”. These don’t count as duplicates, but logically they are the same thing. Unless you clean them up, filters and groupings will treat them as separate.

You can’t fix this with TRIM or LOWER() alone. This kind of mismatch calls for mapping known variations into a single value.

This gives you a consistent version in the standard_size column. It works even if the original size values are all over the place. You can filter or group by the result without worrying about all the different ways a person typed it.

For longer mapping logic, it helps to put the cleaned values into a lookup table and join against it. But when you’re doing light cleanup inline or running a quick fix, CASE keeps it all in one place.

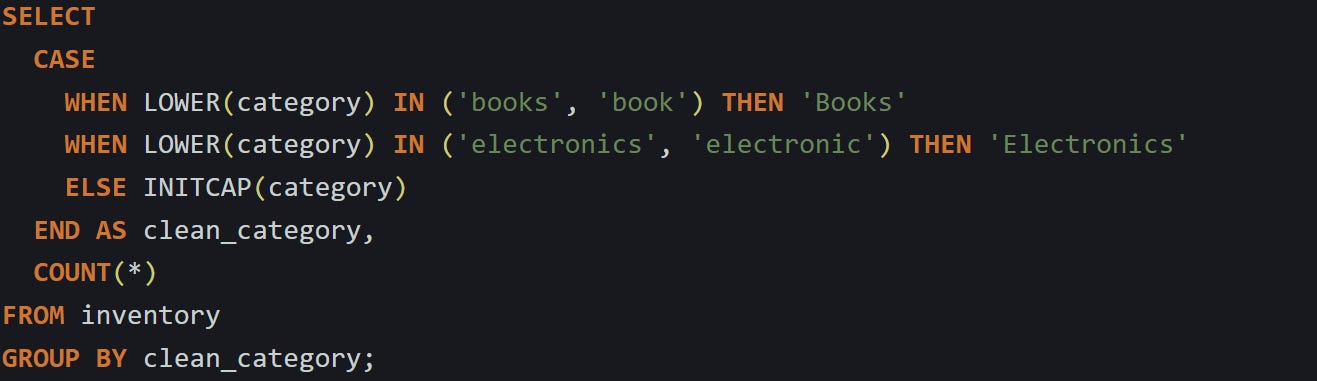

Another way this shows up is in categories. Maybe a field called category has both “Books” and “books”, or “electronics” and “Electronics”. If you want to group or count those, normalizing the format first makes a difference.

This makes things easier to read and avoids split counts. The logic can be as simple or as layered as you need, and it always runs per row in the result set.

Finding and Fixing Bad Patterns

Bad patterns hide in email addresses, phone numbers, usernames, and anything that should follow a format. These don’t always cause errors, but they can throw off reports, bounce emails, or fail API calls. SQL lets you catch many of these using LIKE or more advanced expressions like REGEXP.

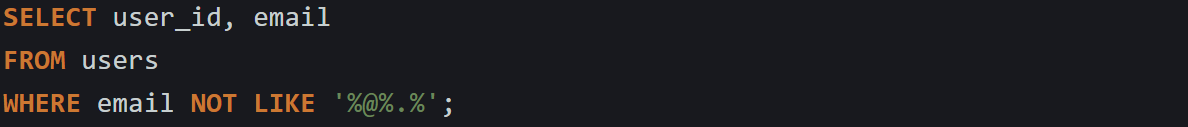

For example, if you’re storing user email addresses, you can check if they contain both an @ sign and a period.

That filters out anything missing the basic structure. It won’t catch every broken email, but it gets rid of the ones that are clearly bad. You can tweak the pattern to match your format more closely.

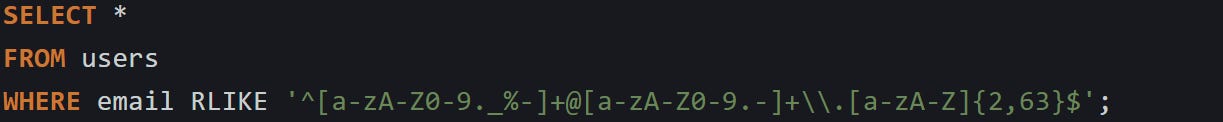

To go deeper, some databases support pattern matching functions like REGEXP_LIKE() or RLIKE, which give you more control over the structure. For example, in MySQL:

This checks for a more complete email pattern. The syntax depends on the database engine, and these functions are usually heavier to run. But when you need them, they help catch rows that need to be fixed or flagged.

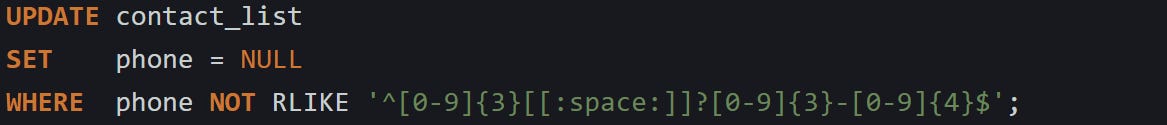

After you’ve spotted the bad data, you can filter it out, update it, or assign a placeholder. Here’s how to rewrite invalid phone numbers as nulls:

This format check only works if your data is already somewhat structured. For raw inputs, you might need to strip out non-numeric characters first, then look at length or prefix.

Bad patterns aren’t just about user input either. They can come from imports, legacy systems, or copy-pasted values. Cleaning them with filters and formatting logic keeps the noise out of reports and dashboards and saves time down the road.

Conclusion

Most of what makes SQL good for cleaning data comes down to how it processes each row. Every time you use a function like TRIM, REPLACE, COALESCE, or CASE, the engine evaluates the input one step at a time, rewrites the result in memory, and returns a cleaned-up version in your query. None of this changes the original data unless you ask it to. That makes it easier to experiment, catch problems early, and keep your results consistent without needing extra tools or custom scripts.