Applications that talk to a database rely on connection pools to keep sessions open instead of creating a new one for every request. Reusing connections avoids the time and overhead of opening and closing them repeatedly. HikariCP has been the default pool in Spring Boot since version 2.0 and is known for being fast and dependable. Problems arise, though, when a connection is borrowed and never returned. This is called a connection leak, and it slowly drains the pool until nothing is left for new requests. HikariCP includes an optional leak detection feature, configurable through Spring Boot properties, that flags these leaks in the logs before they cause failures in production.

How Connection Leak Detection Works in HikariCP

When leak detection is enabled, HikariCP tracks every connection that leaves the pool. The idea is simple, but several parts work together in the background. A borrowed connection doesn’t vanish into the application; it’s wrapped and timed so the pool can tell whether it makes its way back. If it stays out too long, HikariCP logs a warning that points developers to where the connection was borrowed.

What Happens During Checkout

When an application asks for a connection, HikariCP avoids handing out the raw connection object directly. Instead, it provides a proxy wrapper. That wrapper serves as a watchful layer, intercepting calls such as close(). By monitoring those calls, the pool knows when the connection has safely returned. At checkout time, HikariCP records the exact moment the connection leaves the pool. It links that timestamp to the proxy connection object so it can measure how long the connection stays outside. This design is the foundation of leak detection.
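The wrapping idea can be pictured with a JDK dynamic proxy. This is only a conceptual sketch and not HikariCP's own code; the class `TrackingPool` and its methods `borrow` and `outstanding` are hypothetical names chosen for illustration.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Proxy;
import java.sql.Connection;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicLong;

/** Hypothetical illustration of checkout tracking; not HikariCP's implementation. */
final class TrackingPool {
    private final AtomicLong nextId = new AtomicLong();
    // Checkout id -> moment (System.nanoTime) the connection left the pool.
    private final Map<Long, Long> checkoutNanos = new ConcurrentHashMap<>();

    /** Wraps a raw connection in a proxy and records when it was handed out. */
    Connection borrow(Connection raw) {
        long id = nextId.incrementAndGet();
        checkoutNanos.put(id, System.nanoTime());
        InvocationHandler handler = (proxy, method, args) -> {
            if ("close".equals(method.getName())) {
                // Intercept close(): stop tracking, keep the raw connection open for reuse.
                checkoutNanos.remove(id);
                return null;
            }
            try {
                return method.invoke(raw, args); // every other call goes to the raw connection
            } catch (InvocationTargetException e) {
                throw e.getCause(); // rethrow the original exception, e.g. SQLException
            }
        };
        return (Connection) Proxy.newProxyInstance(
                Connection.class.getClassLoader(), new Class<?>[] {Connection.class}, handler);
    }

    /** Number of connections currently outside the pool. */
    int outstanding() {
        return checkoutNanos.size();
    }
}
```

The caller only ever sees the proxy, so the pool learns about every `close()` without the application doing anything special.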

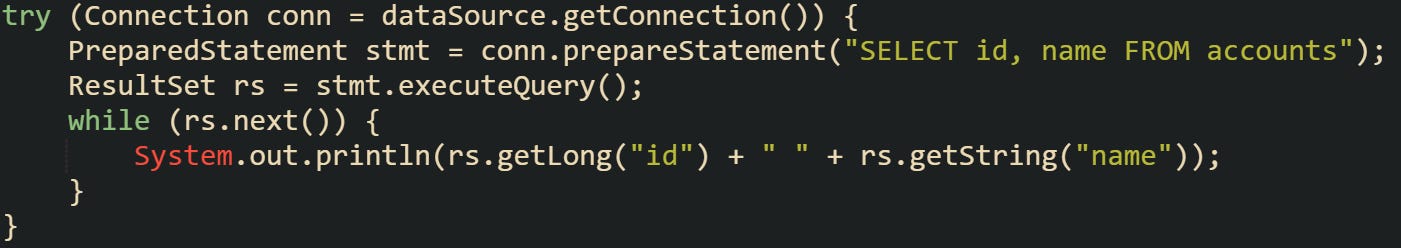

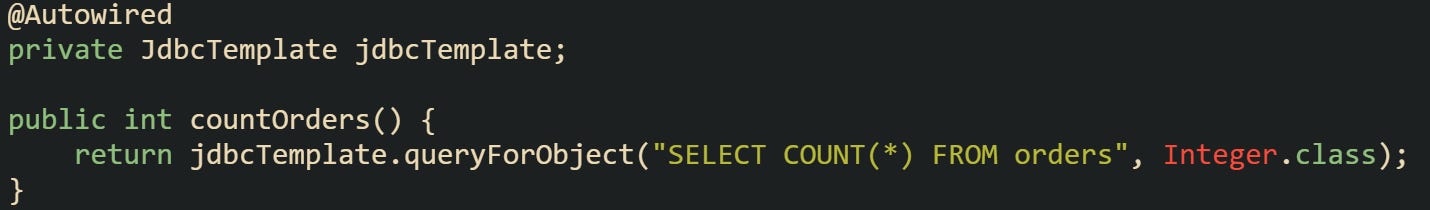

Let’s look at a typical example that shows how a connection is borrowed in a safe way:
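A minimal sketch of such a block, assuming a hypothetical `users` table and a helper class named `UserQueries`; only `dataSource.getConnection()` and `conn.close()` come from the discussion here.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.SQLException;
import javax.sql.DataSource;

final class UserQueries {
    /** Counts rows in a hypothetical users table. */
    static int countUsers(DataSource dataSource) throws SQLException {
        try (Connection conn = dataSource.getConnection();
             PreparedStatement ps = conn.prepareStatement("SELECT COUNT(*) FROM users");
             ResultSet rs = ps.executeQuery()) {
            return rs.next() ? rs.getInt(1) : 0;
        } // rs, ps and conn are closed here; conn.close() returns the connection to the pool
    }
}
```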

From the application’s perspective this feels like normal JDBC work. Behind the scenes, dataSource.getConnection() has returned a proxy, not the raw JDBC object. HikariCP watches that proxy, records the timestamp, and expects conn.close() to be called when the block finishes.

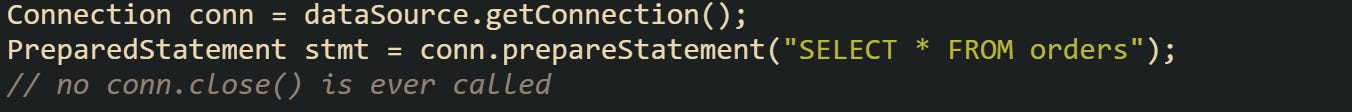

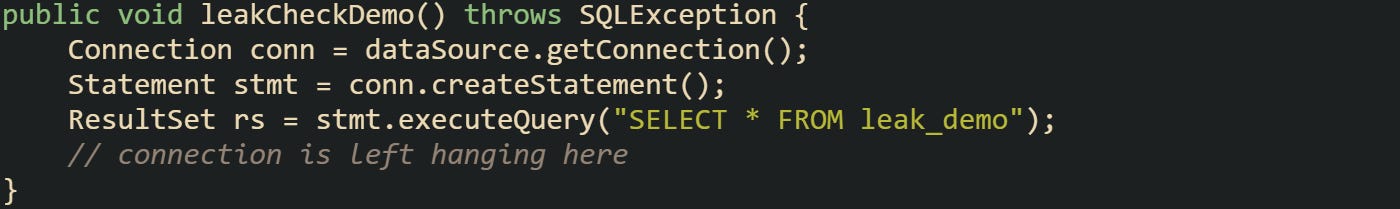

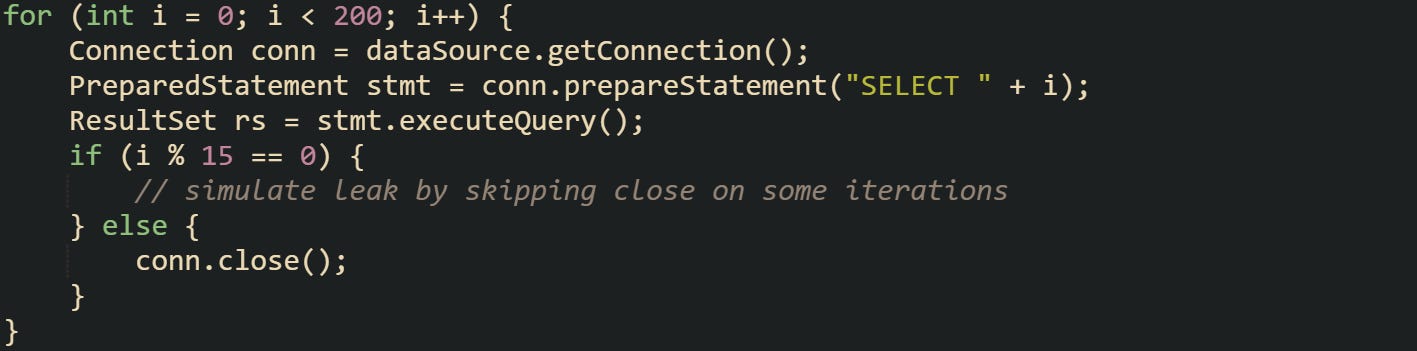

Here’s a less safe example that leaves the connection open:
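The sketch below runs a query against a hypothetical `users` table but never calls `close()`. The class and method names are illustrative assumptions.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.SQLException;
import javax.sql.DataSource;

final class LeakyQueries {
    /** Runs a query against a hypothetical users table and never closes anything. */
    static int countUsersLeaky(DataSource dataSource) throws SQLException {
        Connection conn = dataSource.getConnection();
        PreparedStatement ps = conn.prepareStatement("SELECT COUNT(*) FROM users");
        ResultSet rs = ps.executeQuery();
        // No close() anywhere: once this method returns, the pool still counts conn as in use.
        return rs.next() ? rs.getInt(1) : 0;
    }
}
```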

That connection remains stuck outside the pool. HikariCP detects it once the configured leak detection threshold has been crossed, and a warning is issued.

The Leak Detection Timer

At the center of leak detection is the leakDetectionThreshold property. This value, expressed in milliseconds, defines how long a borrowed connection can stay out of the pool before HikariCP treats it as a possible leak and logs a warning. The default is 0, which disables leak detection, and the lowest value HikariCP accepts for enabling it is 2000 (two seconds).

In Spring Boot, you can set this property in application.properties under the spring.datasource.hikari prefix, as leak-detection-threshold:
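A fragment along these lines would enable it. The fifteen-second value is only an example and should be tuned to the application.

```properties
# Warn when a connection stays out of the pool for more than 15 seconds (value in milliseconds)
spring.datasource.hikari.leak-detection-threshold=15000
```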

With this configuration, any connection held longer than fifteen seconds without being closed will trigger detection.

This threshold doesn’t cancel or forcibly take back the connection. It works like a watchdog. The connection is still in the hands of the application, but the pool assumes that it should have been returned already and brings attention to it through logging.

Internally, HikariCP schedules a leak task on a background executor at the moment a connection is borrowed, with a delay equal to the threshold, and captures the borrowing thread’s stack trace at the same time. Closing the connection cancels the task. If the task runs before that happens, the pool logs the warning along with the captured stack trace. This keeps leaks from draining the pool silently.
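One way to picture this timing is a task that is scheduled at checkout and cancelled when the connection comes back. The sketch below is a simplified illustration under that assumption, not HikariCP's code; `LeakWatch`, `onCheckout`, and `onReturn` are hypothetical names.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;

/** Hypothetical leak timer: warn if a checkout is not returned within the threshold. */
final class LeakWatch {
    private final ScheduledExecutorService scheduler =
            Executors.newSingleThreadScheduledExecutor(r -> {
                Thread t = new Thread(r, "leak-watch");
                t.setDaemon(true); // do not keep the JVM alive just for the watcher
                return t;
            });
    private final long thresholdMillis;
    final List<String> warnings = new CopyOnWriteArrayList<>();

    LeakWatch(long thresholdMillis) {
        this.thresholdMillis = thresholdMillis;
    }

    /** Called at checkout: captures the caller's stack now and schedules a warning. */
    ScheduledFuture<?> onCheckout(String connectionName) {
        Exception origin = new Exception("connection checked out here");
        String threadName = Thread.currentThread().getName();
        return scheduler.schedule(() -> {
            warnings.add("possible leak: " + connectionName + " on thread " + threadName);
            origin.printStackTrace(); // the trace points at the checkout call site
        }, thresholdMillis, TimeUnit.MILLISECONDS);
    }

    /** Called when the connection is closed: cancels the pending warning. */
    void onReturn(ScheduledFuture<?> pending) {
        pending.cancel(false);
    }
}
```

Because the stack trace is captured at checkout rather than when the warning fires, the report points at the code that borrowed the connection, not at the watcher thread.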

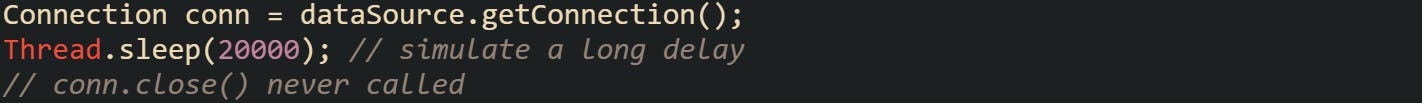

Here’s an example of code that will always cross the threshold:
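A minimal sketch, assuming a hypothetical helper named `SlowHolder` that keeps a connection checked out for a caller-supplied time. Calling it with `Duration.ofSeconds(20)` holds the connection five seconds past a fifteen-second threshold.

```java
import java.sql.Connection;
import java.sql.SQLException;
import java.time.Duration;
import javax.sql.DataSource;

final class SlowHolder {
    /** Keeps a borrowed connection out of the pool for the given time, then closes it. */
    static void holdConnection(DataSource dataSource, Duration hold)
            throws SQLException, InterruptedException {
        try (Connection conn = dataSource.getConnection()) {
            // Stands in for slow work done while the connection is checked out.
            Thread.sleep(hold.toMillis());
        }
    }
}
```

Usage would look like `SlowHolder.holdConnection(dataSource, Duration.ofSeconds(20));`. The connection is eventually closed, but only after the threshold has already passed.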

With a threshold of fifteen seconds, this snippet guarantees a leak warning from HikariCP.

How to Spot and Stop Connection Leaks

Leak detection in HikariCP signals that something has gone wrong, but the real fix comes from reading that signal and acting on it. The previous section explained how HikariCP times each checkout and logs a warning when the threshold is passed. This section turns to practical debugging: how to read the warnings in the logs, why leaks appear, and which habits keep them from coming back.

Spotting Leaks with Logs

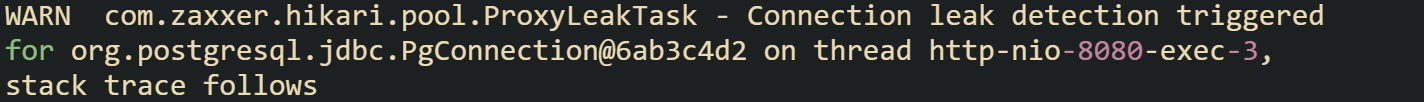

When a leak is detected, HikariCP logs enough information to make the problem traceable. The warning names the connection and the thread that borrowed it, and it includes a stack trace captured when the connection left the pool. The connection identifier is rarely useful on its own, but the stack trace tells the real story. Its first few lines usually lead straight to the code that called getConnection(), giving developers a clear starting point for debugging.

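The warning is logged at WARN level with a message of the form “Connection leak detection triggered for <connection> on thread <thread>, stack trace follows”, followed by an exception labeled “Apparent connection leak detected” and the captured stack trace.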

During development, it’s common to shorten the leak detection threshold so leaks surface quickly and tie directly to recent changes. In production, the value is usually longer to avoid unnecessary noise from queries that take more time but are still valid.

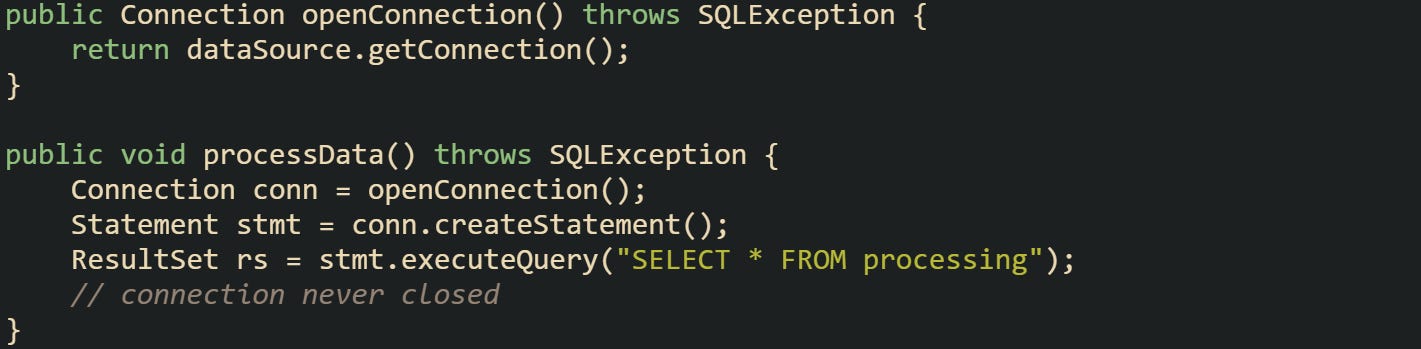

A good way to test this behavior is to write a small routine that deliberately borrows a connection and never closes it, then study the log that appears.

Running this with a short threshold produces a warning as soon as the threshold elapses. Reading the trace alongside the code helps you understand how HikariCP reports the problem and where to look for the fix.

In larger projects, these warnings can sometimes be buried in regular log output. Many teams redirect them to a dedicated log file during development so they can be reviewed in bulk. Pairing that with automated integration tests that run connection-heavy workloads makes leaks much easier to detect before they ever reach production.

Common Causes of Leaks

The actual mistakes behind leaks are often less dramatic than they sound. Most come from forgetting to close a connection under certain paths or handling it incorrectly across methods. We saw in the last section how a missing close() is enough to trigger a warning. Here we’re going to look at a few common variations of this.

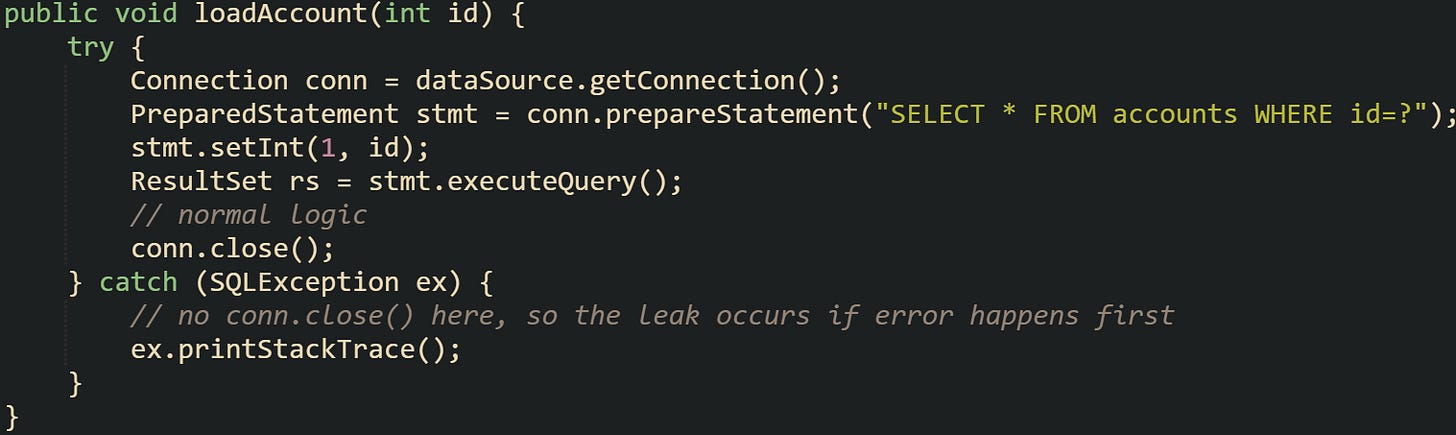

One classic case happens when exceptions skip over the close call:
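In the sketch below, which uses a hypothetical `users` table and class name, the close calls sit at the end of the method. Any exception thrown before them skips both.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.SQLException;
import javax.sql.DataSource;

final class BrittleUpdates {
    /** Renames a user; leaks the connection whenever the update throws. */
    static void rename(DataSource dataSource, long id, String name) throws SQLException {
        Connection conn = dataSource.getConnection();
        PreparedStatement ps = conn.prepareStatement("UPDATE users SET name = ? WHERE id = ?");
        ps.setString(1, name);
        ps.setLong(2, id);
        ps.executeUpdate(); // if this throws, the two lines below never run
        ps.close();
        conn.close();
    }
}
```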

A different and frequent source is pushing connection responsibility across multiple layers. One method borrows it, another uses it, and somewhere along the way the close call disappears:
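A sketch of that pattern, using a hypothetical `ReportDao` and `orders` table: each method looks reasonable on its own, yet no layer owns the close call.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.SQLException;
import javax.sql.DataSource;

final class ReportDao {
    private final DataSource dataSource;

    ReportDao(DataSource dataSource) {
        this.dataSource = dataSource;
    }

    /** Borrows a connection and hands it out, expecting the caller to close it. */
    Connection open() throws SQLException {
        return dataSource.getConnection();
    }

    /** Uses a connection it did not borrow, so it leaves closing to someone else. */
    int countOrders(Connection conn) throws SQLException {
        try (PreparedStatement ps = conn.prepareStatement("SELECT COUNT(*) FROM orders");
             ResultSet rs = ps.executeQuery()) {
            return rs.next() ? rs.getInt(1) : 0;
        }
    }

    /** Ties the two together; neither layer ever calls close() on the connection. */
    int orderCount() throws SQLException {
        Connection conn = open();
        return countOrders(conn);
    }
}
```

Keeping the borrow and the close in the same method, ideally the same try block, removes the ambiguity about who is responsible.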

These are not complex mistakes, but they drain the pool quickly when traffic is high. Leaks also happen when developers hold connections open for long-running work, such as large reports or blocking loops. While not technically leaks in the sense of being “forgotten,” they have the same effect: the pool has fewer connections left to serve other requests.

Best Practices to Stop Leaks

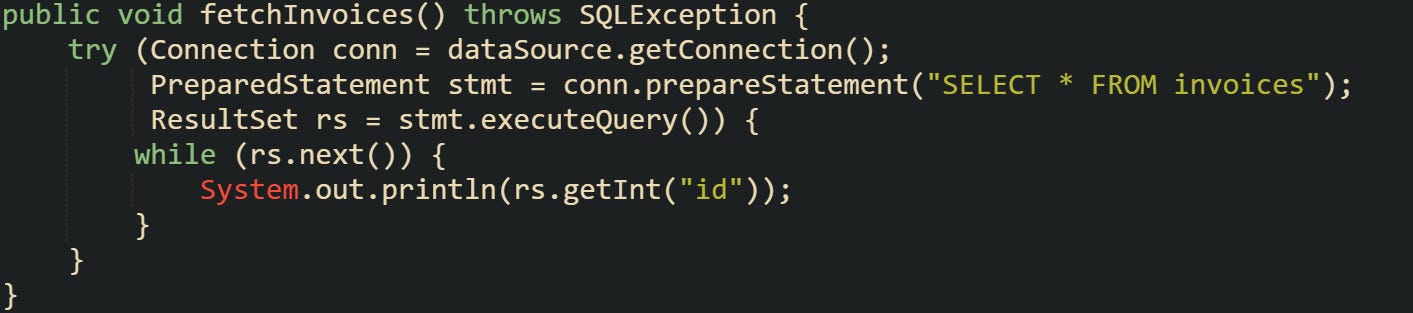

Preventing leaks is usually about putting habits in place that make it hard to forget returns. Try-with-resources is one of the most reliable safeguards, as it closes every resource declared in the try header even if exceptions are thrown.
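As a sketch, here is an update method written that way. The `users` table and the `UserUpdates` class are hypothetical.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.SQLException;
import javax.sql.DataSource;

final class UserUpdates {
    /** Renames a user and returns the number of rows changed. */
    static int rename(DataSource dataSource, long id, String name) throws SQLException {
        try (Connection conn = dataSource.getConnection();
             PreparedStatement ps =
                     conn.prepareStatement("UPDATE users SET name = ? WHERE id = ?")) {
            ps.setString(1, name);
            ps.setLong(2, id);
            return ps.executeUpdate(); // even if this throws, ps and conn are closed
        }
    }
}
```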

This makes sure that the connection always finds its way back to the pool.

When Spring’s JdbcTemplate is in use, lifecycle handling is managed by the framework itself, which greatly reduces the chance of leaks.

The application code never interacts with the raw connection. The pool still does the checkout and return in the background, but developers don’t need to write closing logic themselves.

Transactions deserve attention too. A transaction that drags on for minutes holds a connection the entire time. Setting a timeout helps keep them from overstaying.
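One way to do that application-wide is Spring Boot's `spring.transaction.default-timeout` property. The 30-second value below is an illustrative assumption, not a recommendation.

```properties
# Default timeout for transactions that do not declare their own (illustrative value)
spring.transaction.default-timeout=30s
```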

With a default like this, a transaction that crosses the limit is rolled back, and the connection is freed for others.

Putting Leak Detection Into Action

Turning leak detection on is only useful if the warnings are acted upon. Development and testing environments are the best places to tighten the threshold, sometimes to only a few seconds, so that leaks reveal themselves quickly. This forces attention on missing close calls before they ship. Stress testing can also help expose leaks that don’t show up during small runs. Borrowing many connections in a loop while intentionally leaving some unclosed will produce multiple warnings. Watching these warnings teaches you how HikariCP reports leaks under load.
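A rough sketch of such a loop follows. The `LeakStress` class and its parameters are hypothetical, and the method returns how many connections it left unclosed so the result can be compared with the number of warnings in the log.

```java
import java.sql.Connection;
import java.sql.SQLException;
import javax.sql.DataSource;

final class LeakStress {
    /** Borrows {@code total} connections and leaves every {@code leakEvery}-th one unclosed. */
    static int run(DataSource dataSource, int total, int leakEvery) throws SQLException {
        int leaked = 0;
        for (int i = 1; i <= total; i++) {
            Connection conn = dataSource.getConnection();
            if (i % leakEvery == 0) {
                leaked++;     // deliberately skip close() to simulate a leak
            } else {
                conn.close(); // normal return to the pool
            }
        }
        return leaked;
    }
}
```

Keep the number of leaked connections below the pool's maximum size. Otherwise `getConnection()` blocks once the pool is exhausted and eventually fails with a timeout.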

This type of test can be run with a short threshold to see how fast warnings appear and how easy it is to track them through the logs.

In production, thresholds are set longer to avoid false warnings from queries that genuinely take time. But leak detection still serves as a safety net. When combined with normal monitoring tools such as Actuator metrics, it helps keep the pool healthy and prevents unseen leaks from slowly starving the application of connections.

Conclusion

HikariCP’s leak detection works by wrapping every borrowed connection, timing its life outside the pool, and logging a warning when the threshold is crossed. Those mechanics give developers a clear trace of where a leak began, which makes it possible to stop connections from slipping away silently. Through Spring Boot’s configuration properties, this safety net is easy to turn on and valuable in both development and production, keeping database pools reliable under load.