Large API responses can put pressure on memory if everything is handled in a single block. By default, an HttpMessageConverter (usually MappingJackson2HttpMessageConverter) streams JSON directly to the HttpServletResponse output stream, so memory growth mostly comes from building large in-memory objects like a List or Map before serialization starts. That’s fine when the data is small, but it turns wasteful when the response grows into millions of rows or deeply nested objects. A better option is to stream JSON a piece at a time so the client begins receiving data while the server is still preparing the rest. This keeps memory steady and gets results to the client faster. To understand how this works, it helps to look at the mechanics inside Spring Boot and the tools it provides to make streaming practical and reliable.

How JSON Streaming Works in Spring Boot

Streaming in Spring Boot is built on top of the way responses are handled in the servlet model. Normally, the controller assembles the complete result before any of it is written out, which is fine for short payloads but far less practical for large ones. JSON streaming changes that process by writing each piece to the client as soon as it’s available, while still producing valid JSON that can be parsed on the fly.

Response Building in Normal Mode

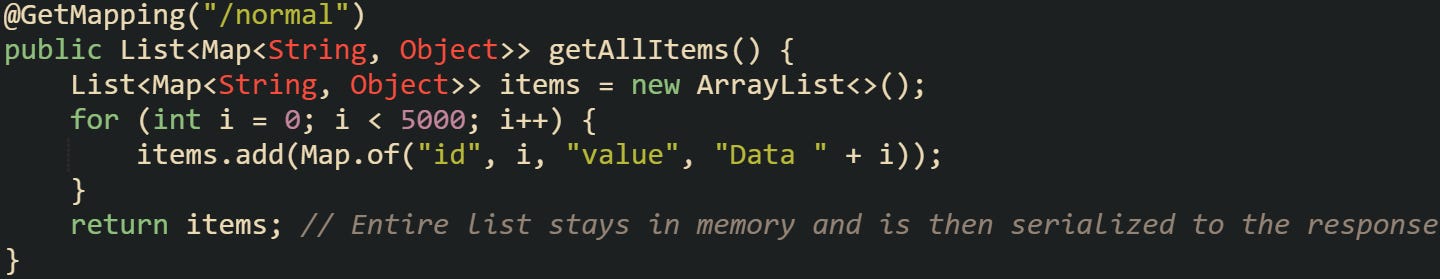

When a controller in Spring Boot returns an object, the framework hands it to a message converter, most commonly MappingJackson2HttpMessageConverter. This converter uses the Jackson library to write the object as JSON text to the response output stream as values are serialized. The servlet container still has a small response buffer (commonly around 8 KB), and if no Content-Length is set, HTTP/1.1 falls back to chunked transfer so data can flow before the total size is known. As long as serialization completes, the client receives a well-formed JSON document. The real memory cost comes from holding the entire List in memory before it’s written out.

This code works fine until the list grows very large. At that point the full list must be held in memory, serialized, and then written in one pass. For many applications that deal with hundreds of thousands of records, this pattern becomes heavy and slow. It also means clients wait for everything to finish before they see the first byte of data.

Shifting From Buffered Output to Streaming

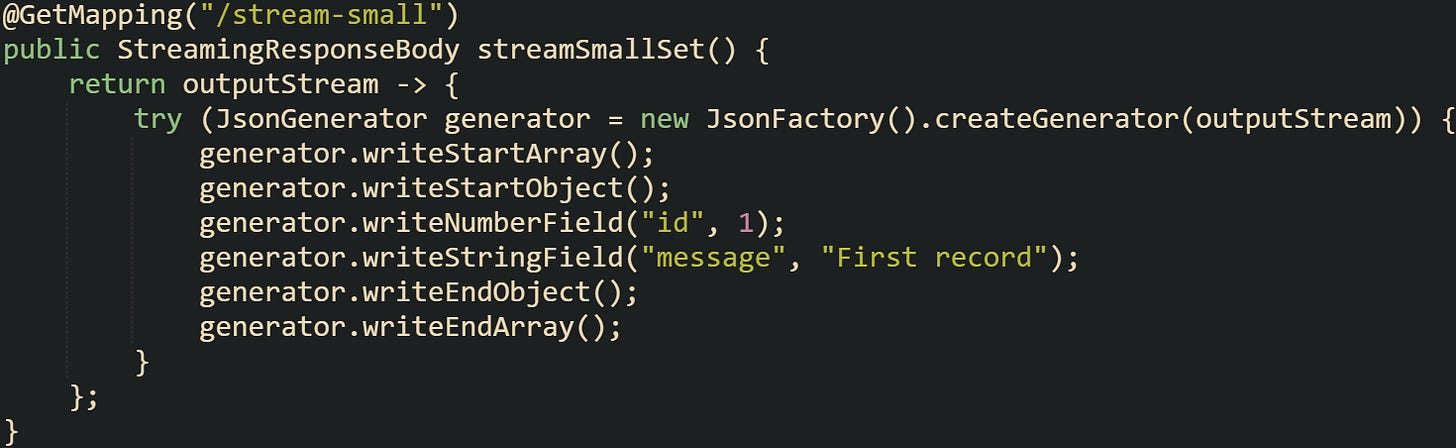

Streaming changes the flow by writing directly to the servlet response output stream. Instead of waiting for a complete object graph, JSON elements are written one by one, and the client can start receiving data almost immediately. Spring Boot makes this possible with the StreamingResponseBody type, which provides access to the raw output stream managed by the server.

This writes just a single object wrapped in an array, but the important detail is that the JSON is written directly to the response stream without waiting for serialization of a larger structure. With larger data sets, the same technique can be extended to write in a loop, flushing periodically so clients start processing results early.
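The loop-and-flush idea can be sketched with nothing but the JDK. This is a minimal illustration, not the article's original listing: the class name ArrayStreamSketch, the method names, and the flushEvery parameter are all hypothetical, and the JSON is written by hand only to keep the example free of dependencies.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

// Hypothetical helper: writes a JSON array one element at a time.
final class ArrayStreamSketch {

    // Writes [{"id":1},{"id":2},...] and flushes after every flushEvery elements.
    // flushEvery must be greater than zero.
    static void writeIds(OutputStream out, int count, int flushEvery) throws IOException {
        out.write('[');
        for (int i = 1; i <= count; i++) {
            if (i > 1) {
                out.write(',');
            }
            out.write(("{\"id\":" + i + "}").getBytes(StandardCharsets.UTF_8));
            if (i % flushEvery == 0) {
                out.flush(); // hand what has been written so far to the layer below
            }
        }
        out.write(']');
        out.flush();
    }

    // Convenience for trying the writer without a server.
    static String render(int count, int flushEvery) {
        ByteArrayOutputStream sink = new ByteArrayOutputStream();
        try {
            writeIds(sink, count, flushEvery);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return new String(sink.toByteArray(), StandardCharsets.UTF_8);
    }
}
```

In a Spring MVC controller, a method like this could serve as the StreamingResponseBody, for example `out -> ArrayStreamSketch.writeIds(out, 1000, 100)`, because that interface's single method receives the response OutputStream.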

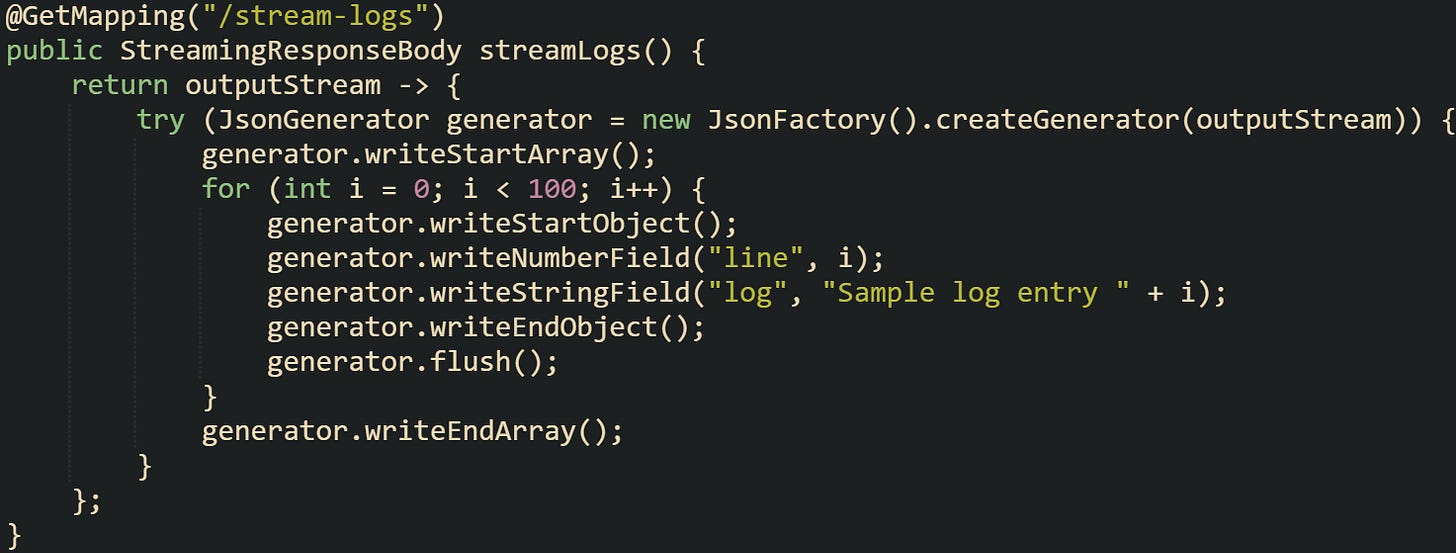

A different pattern that works well for streaming is returning log lines or event records incrementally. Instead of holding them in a list, you can generate each event on demand and send it immediately:

With this, a client application doesn’t need to wait for all 100 lines. It begins reading from the array almost as soon as the stream opens.

Chunked Transfer Encoding

On HTTP/1.1, streaming relies on Transfer-Encoding: chunked. On HTTP/2, there is no chunked transfer; the server streams data with framed DATA segments without a Content-Length. When a response uses chunked transfer, the server doesn’t have to provide a Content-Length header up front. Instead, data is broken into chunks, each prefixed with its size in bytes, and sent immediately. The client pieces them together as they arrive. This matches perfectly with JSON streaming, where each element can be flushed as soon as it’s written.

When the response size isn’t known:

HTTP/1.1 streams with Transfer-Encoding: chunked.

HTTP/2 streams with frames and the END_STREAM flag instead of chunked encoding.

Supplying a Content-Length header disables chunked transfer on HTTP/1.1.
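To make the HTTP/1.1 wire format concrete, the following sketch frames a few byte sequences the way chunked transfer coding does: a hexadecimal size line, the data, a CRLF, and finally a zero-length chunk. Application code never does this by hand, since the servlet container handles it. The class ChunkFramingSketch is purely illustrative.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;

// Illustrative only: shows what chunked framing looks like on the wire.
final class ChunkFramingSketch {

    // Frames each piece as one chunk and appends the terminating zero-length chunk.
    static byte[] frame(String... pieces) {
        ByteArrayOutputStream wire = new ByteArrayOutputStream();
        for (String piece : pieces) {
            byte[] data = piece.getBytes(StandardCharsets.UTF_8);
            if (data.length == 0) {
                continue; // a zero-length chunk would signal the end of the body
            }
            writeAscii(wire, Integer.toHexString(data.length) + "\r\n"); // size in hex
            wire.write(data, 0, data.length);                            // chunk data
            writeAscii(wire, "\r\n");
        }
        writeAscii(wire, "0\r\n\r\n"); // last chunk, no trailer fields
        return wire.toByteArray();
    }

    private static void writeAscii(ByteArrayOutputStream wire, String text) {
        byte[] bytes = text.getBytes(StandardCharsets.US_ASCII);
        wire.write(bytes, 0, bytes.length);
    }
}
```

Framing the two pieces `[1,` and `2]` yields a 3-byte chunk, a 2-byte chunk, and the terminator, which the client reassembles into `[1,2]`.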

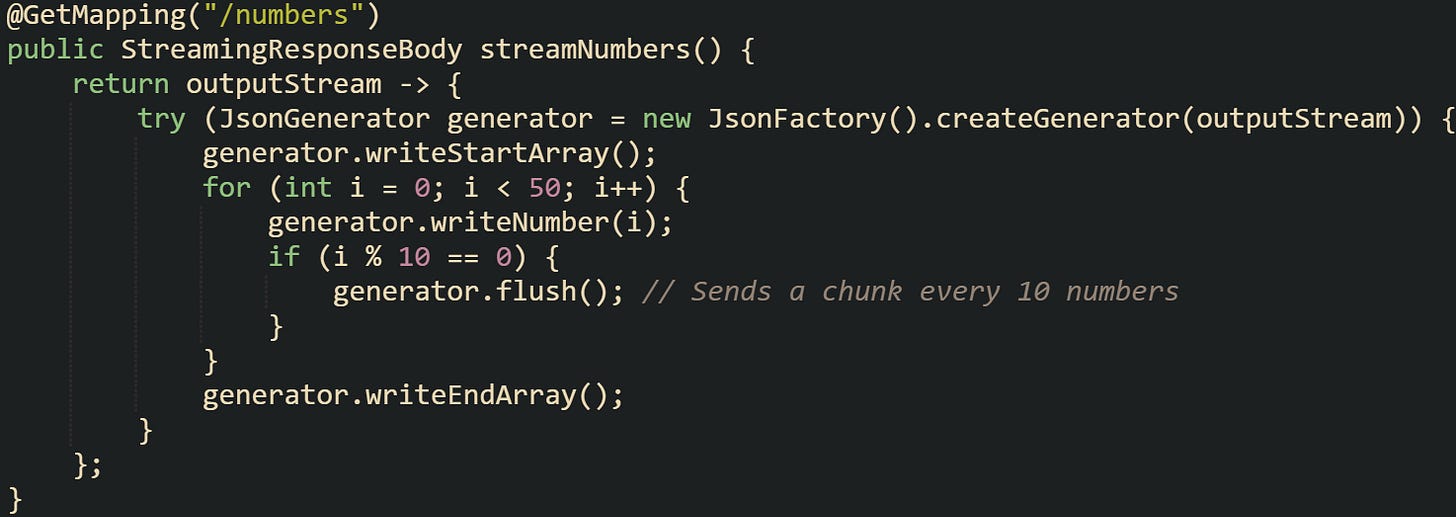

To visualize the difference, consider this code which writes a sequence of numbers in JSON without a precomputed length:

From the client side, the response arrives in a series of chunks. Each flush pushes a piece of the array across the network, allowing clients to begin parsing while the server continues writing. Large arrays that would otherwise take seconds or minutes to appear in full are instead available piece by piece.
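One way to see the piece-by-piece arrival without a network is to record what sits between consecutive flushes. The sketch below assumes a hypothetical SegmentRecorder sink in place of the real response stream. In a real server, the container's buffer size also influences where chunk boundaries fall, so the segments here are only an approximation of what a client would observe.

```java
import java.io.ByteArrayOutputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

final class FlushSegmentsSketch {

    // Hypothetical sink that remembers what was written between consecutive flushes.
    static final class SegmentRecorder extends OutputStream {
        private final ByteArrayOutputStream pending = new ByteArrayOutputStream();
        final List<String> segments = new ArrayList<>();

        @Override
        public void write(int b) {
            pending.write(b);
        }

        @Override
        public void write(byte[] b, int off, int len) {
            pending.write(b, off, len);
        }

        @Override
        public void flush() {
            if (pending.size() > 0) {
                segments.add(new String(pending.toByteArray(), StandardCharsets.UTF_8));
                pending.reset();
            }
        }
    }

    // Streams [1,2,...,count], flushing after every flushEvery numbers (flushEvery > 0).
    static List<String> streamNumbers(int count, int flushEvery) {
        SegmentRecorder out = new SegmentRecorder();
        out.write('[');
        for (int i = 1; i <= count; i++) {
            if (i > 1) {
                out.write(',');
            }
            byte[] digits = Integer.toString(i).getBytes(StandardCharsets.UTF_8);
            out.write(digits, 0, digits.length);
            if (i % flushEvery == 0) {
                out.flush();
            }
        }
        out.write(']');
        out.flush();
        return out.segments;
    }
}
```

Calling `streamNumbers(5, 2)` records three segments that concatenate to `[1,2,3,4,5]`. No single segment is valid JSON on its own, which is why clients that want to start early need an incremental parser.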

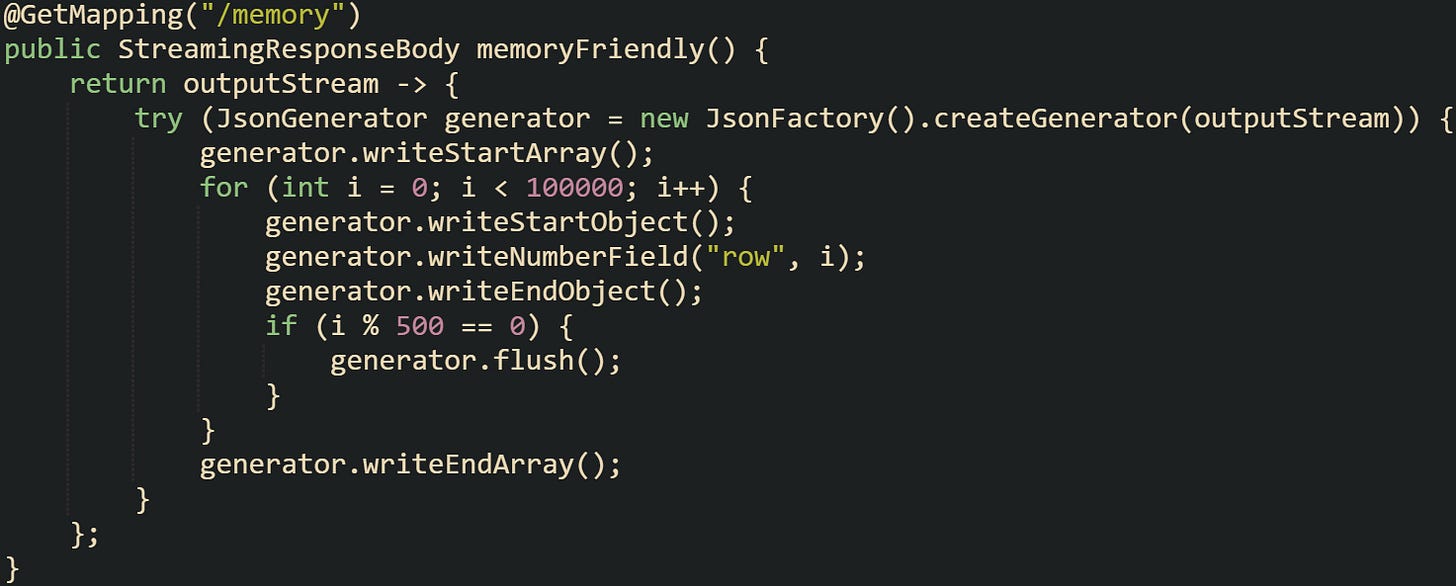

StreamingResponseBody Example

StreamingResponseBody is the most direct way to stream JSON in a Spring Boot application. It is a functional interface, usually supplied as a lambda, whose single method receives the response OutputStream and writes bytes to it. Inside that block, you can use Jackson’s JsonGenerator to write valid JSON tokens as they’re ready.

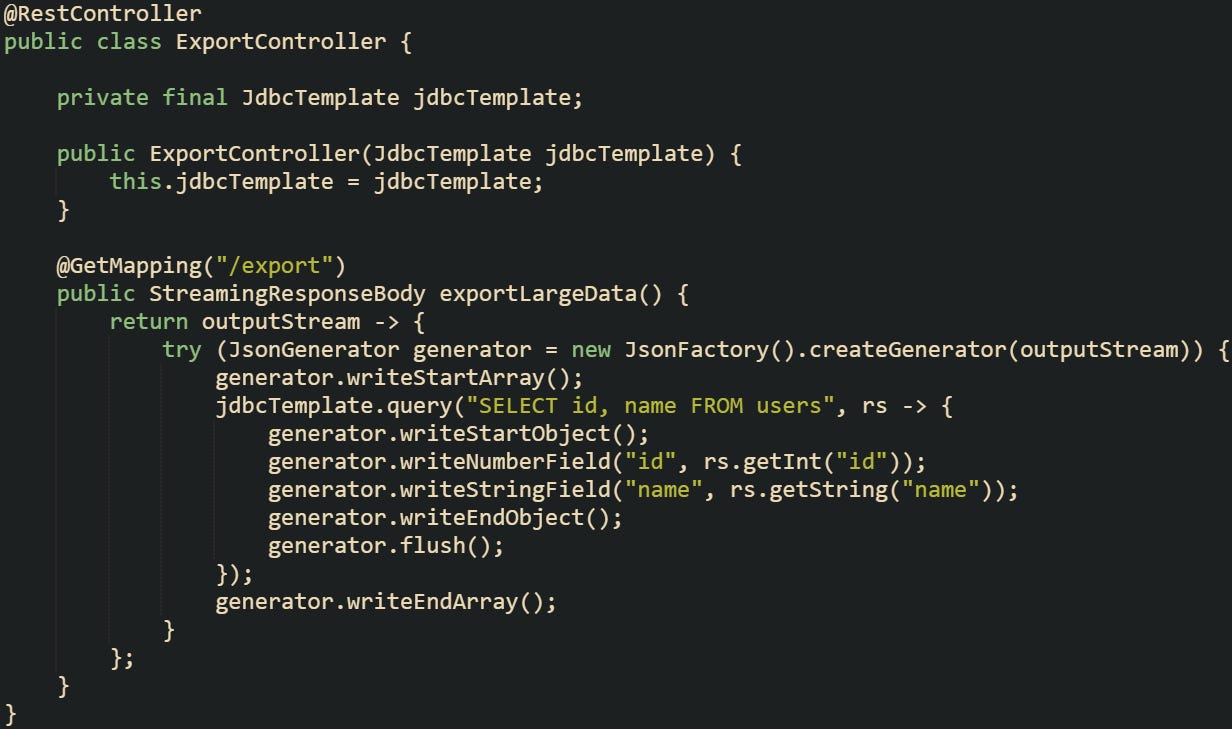

A practical example is exporting a database table with thousands of rows. Instead of fetching all rows into a list, you can stream each row from the database cursor and write it immediately:

This method ties together database iteration with JSON streaming. Each row is written out as it’s read, and the generator flushes frequently so the client receives a continuous stream of results. Memory usage stays flat, even if the table has millions of rows, because nothing accumulates in large collections on the server.
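The cursor-to-stream pattern can be sketched as follows. An Iterator stands in for the database cursor, and the Row type, the flush interval of 500, and the hand-written escaping are assumptions made for the sake of a dependency-free example. In a Spring Boot application, Jackson's JsonGenerator would normally take care of escaping and structure.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.OutputStream;
import java.io.OutputStreamWriter;
import java.io.UncheckedIOException;
import java.io.Writer;
import java.nio.charset.StandardCharsets;
import java.util.Iterator;

final class RowExportSketch {

    // Hypothetical row type; a real export would map columns from the cursor.
    static final class Row {
        final long id;
        final String name;

        Row(long id, String name) {
            this.id = id;
            this.name = name;
        }
    }

    // Writes each row as soon as the cursor yields it; only one row is held at a time.
    static long export(Iterator<Row> cursor, OutputStream out) throws IOException {
        Writer writer = new OutputStreamWriter(out, StandardCharsets.UTF_8);
        long written = 0;
        writer.write('[');
        while (cursor.hasNext()) {
            Row row = cursor.next();
            if (written++ > 0) {
                writer.write(',');
            }
            writer.write("{\"id\":" + row.id + ",\"name\":" + quote(row.name) + "}");
            if (written % 500 == 0) {
                writer.flush(); // push a batch of rows toward the client
            }
        }
        writer.write(']');
        writer.flush();
        return written;
    }

    // Minimal JSON string escaping: quotes, backslashes, and control characters.
    static String quote(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (int i = 0; i < s.length(); i++) {
            char c = s.charAt(i);
            if (c == '"' || c == '\\') {
                sb.append('\\').append(c);
            } else if (c < 0x20) {
                sb.append(String.format("\\u%04x", (int) c));
            } else {
                sb.append(c);
            }
        }
        return sb.append('"').toString();
    }

    // Convenience for trying the export without a server or database.
    static String exportToString(Iterator<Row> cursor) {
        ByteArrayOutputStream sink = new ByteArrayOutputStream();
        try {
            export(cursor, sink);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return new String(sink.toByteArray(), StandardCharsets.UTF_8);
    }
}
```

The important property is that the loop never collects rows. Whatever backs the iterator has to be lazy as well, otherwise the memory cost simply moves into the data access layer.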

Mechanics Behind Streaming

Streaming JSON in Spring Boot is more than just writing objects out of a loop. There are lower-level mechanics at work that keep the process safe, efficient, and reliable. Jackson handles the actual JSON generation, the servlet container manages the I/O, and Spring ties the two together. Each part matters, because streaming means the server can no longer rely on building the full response first.

Integration With Jackson

Jackson is the engine behind JSON handling in Spring Boot. For normal serialization it walks a complete object tree and writes it out in one pass. For streaming, Jackson provides the JsonGenerator API, which writes JSON tokens directly to an output stream. Instead of holding a full map or list in memory, it writes objects as soon as they’re available.

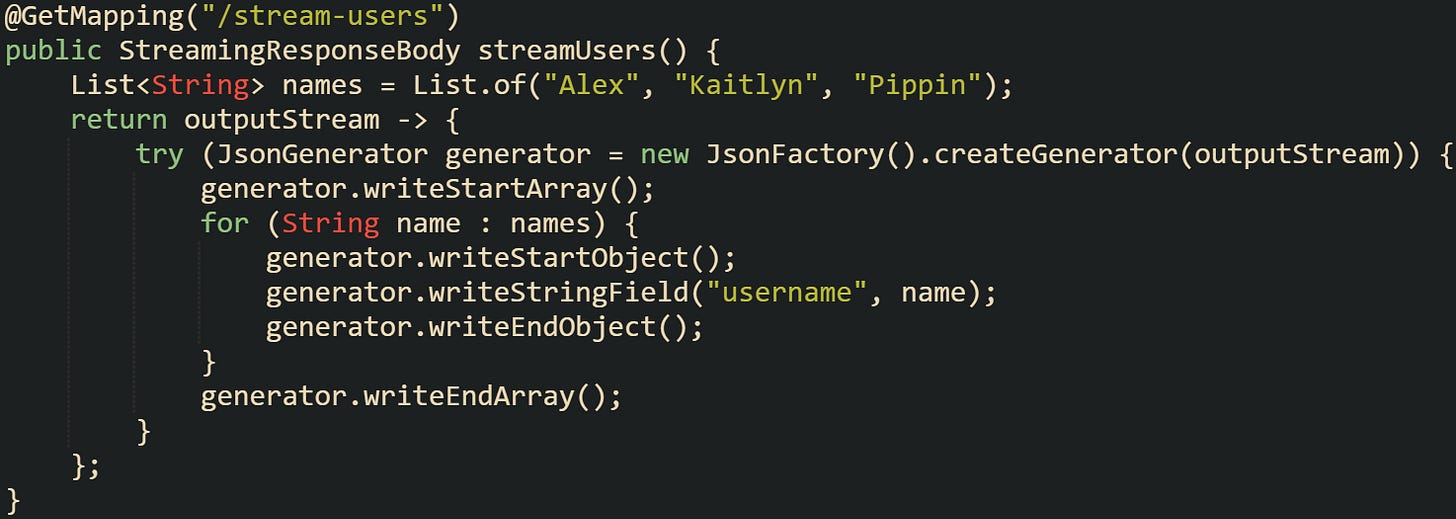

This code builds a JSON array token by token. Each call to writeStartObject, writeStringField, and writeEndObject adds structure without waiting to serialize a full collection.

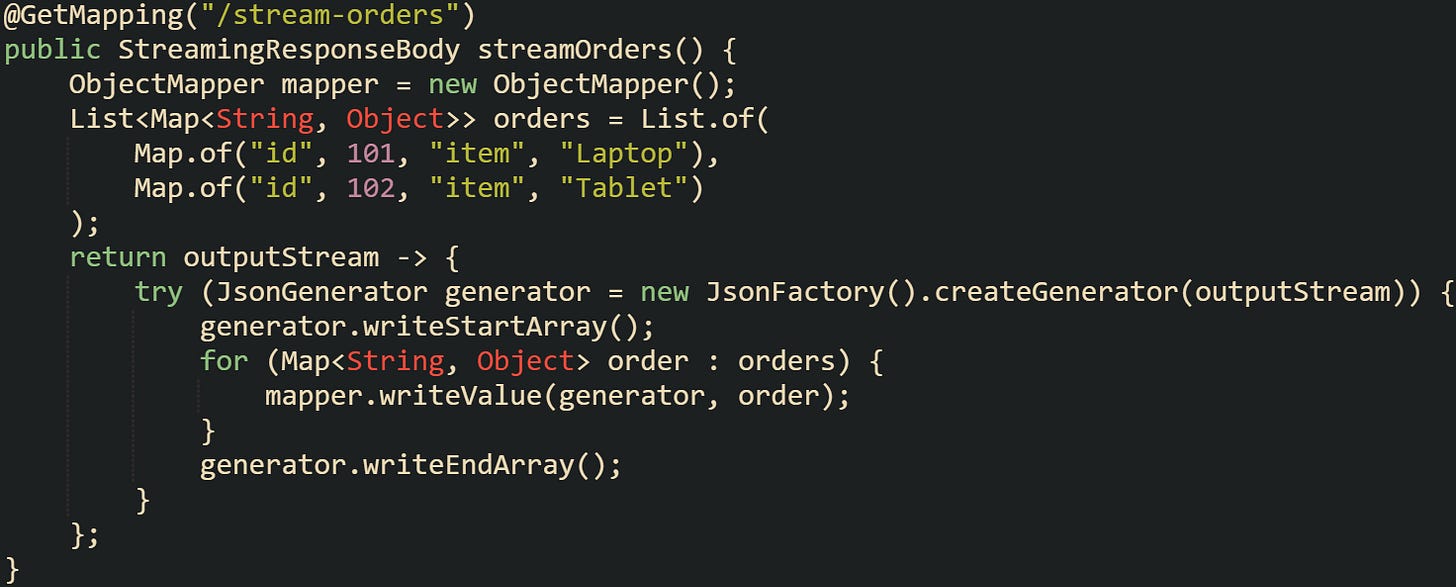

Jackson also supports streaming through ObjectMapper’s writeValue method when pointed at an output stream. This lets you serialize objects progressively without manually writing every token:

Using the mapper inside a streaming context is handy when objects are more complex and you don’t want to write every field manually.

Thread Handling in I/O Flow

In classic blocking handlers the request runs on a servlet thread. When a controller returns StreamingResponseBody, Spring MVC switches to asynchronous processing and writes on a separate TaskExecutor thread while the original servlet thread is released. This frees the servlet thread for other requests, but an executor thread stays busy for as long as the stream is being written.

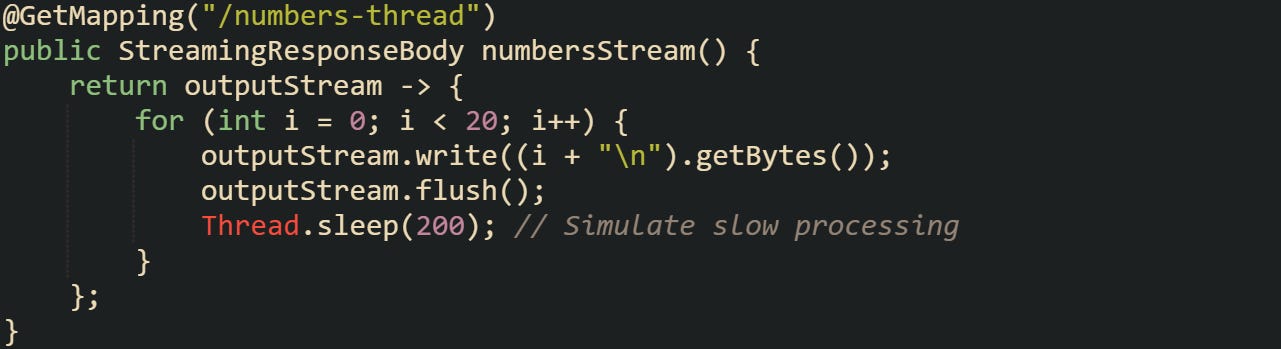

With StreamingResponseBody, the writing runs on an async executor thread, not the original request thread:

Each number is written and flushed with a pause between them. An async worker thread writes the data gradually; the original servlet thread has already been released.
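The hand-off between threads can be imitated with a plain ExecutorService. This is a rough analogy under stated assumptions: the pool, the thread name stream-writer, and the in-memory response are hypothetical stand-ins, and Spring MVC's actual async dispatch involves more machinery than shown here.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

final class AsyncWriteSketch {

    // Returns [name of the calling thread, name of the writing thread, body written].
    static List<String> handle() {
        ExecutorService writerPool =
                Executors.newSingleThreadExecutor(task -> new Thread(task, "stream-writer"));
        ByteArrayOutputStream response = new ByteArrayOutputStream();
        String requestThread = Thread.currentThread().getName();
        try {
            // The writing work is handed to the executor, as it is for StreamingResponseBody.
            Future<String> writing = writerPool.submit(() -> {
                for (int i = 1; i <= 3; i++) {
                    byte[] line = (i + "\n").getBytes(StandardCharsets.UTF_8);
                    response.write(line, 0, line.length);
                    Thread.sleep(10); // pause between numbers
                }
                return Thread.currentThread().getName();
            });
            // In Spring MVC the servlet thread would return to its pool at this point.
            // This sketch waits only so that it can report what happened.
            String writerThread = writing.get();
            String body = new String(response.toByteArray(), StandardCharsets.UTF_8);
            return Arrays.asList(requestThread, writerThread, body);
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            writerPool.shutdown();
        }
    }
}
```

The two thread names in the result differ, which is the point: the thread that accepted the request is not the one that spends time writing.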

Reactive stacks, which will be covered later, don’t hold a thread in the same way. Instead, they use event loops and non-blocking I/O. But even in the servlet model, the container manages buffers so that the writing process doesn’t stall unless the client cannot keep up.

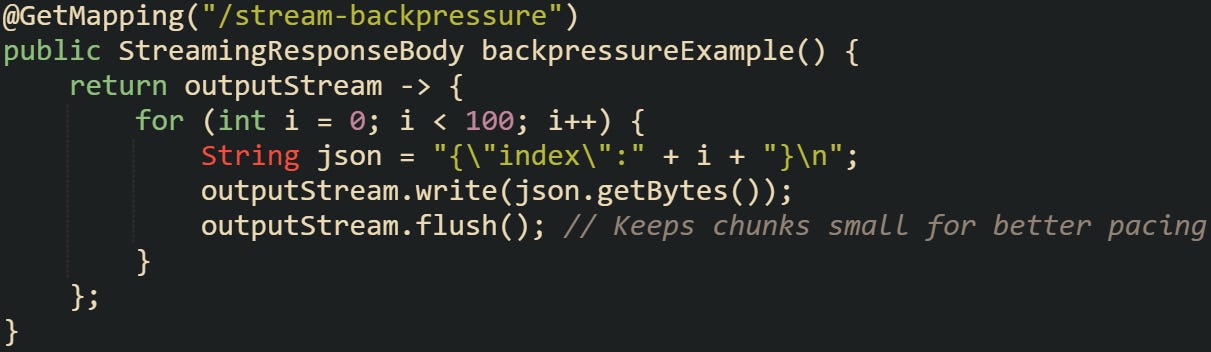

Backpressure and Client Read Speed

When data is streamed, the server must account for clients that don’t read quickly. If the client lags, the output buffer fills, and the server pauses writing until there’s room again. This natural backpressure prevents unbounded memory use on the server side. A database export is a good example of where this shows up. Each row can be written as soon as it’s read, but if the client is slow, the servlet container’s output buffer holds the data temporarily. If that buffer is full, the server blocks until the client consumes more.

Code that streams in small chunks handles this gracefully. Writing one massive string without flushes would defeat the purpose:

Clients reading line by line can start processing immediately, while the server adapts to the pace of the consumer.
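The blocking behaviour described above can be reproduced in miniature with JDK piped streams. Here a PipedInputStream with a small buffer plays the role of the output buffer plus a slow client. It is only an analogy: the real path also involves the socket and TCP flow control, which this sketch does not model, and the class name and sizes are made up.

```java
import java.io.IOException;
import java.io.PipedInputStream;
import java.io.PipedOutputStream;
import java.io.UncheckedIOException;
import java.util.concurrent.atomic.AtomicInteger;

final class BackpressureSketch {

    // Returns {bytes written while nobody was reading, total bytes eventually read}.
    static int[] run(int bufferSize, int totalBytes) {
        AtomicInteger written = new AtomicInteger();
        try (PipedInputStream clientSide = new PipedInputStream(bufferSize)) {
            PipedOutputStream serverSide = new PipedOutputStream(clientSide);
            Thread writer = new Thread(() -> {
                try (PipedOutputStream out = serverSide) {
                    for (int i = 0; i < totalBytes; i++) {
                        out.write('x'); // blocks while the pipe buffer is full
                        written.incrementAndGet();
                    }
                } catch (IOException e) {
                    throw new UncheckedIOException(e);
                }
            }, "server-writer");
            writer.start();

            Thread.sleep(300);             // the "client" is not reading yet
            int stalledAt = written.get(); // can never exceed bufferSize

            int read = 0;
            while (clientSide.read() != -1) { // now the client drains the stream
                read++;
            }
            writer.join();
            return new int[] {stalledAt, read};
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }
}
```

With `run(16, 100)`, the writer cannot get more than 16 bytes ahead of the reader, yet all 100 bytes arrive once the reader starts consuming. Server memory stays bounded by the buffer, not by the size of the response.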

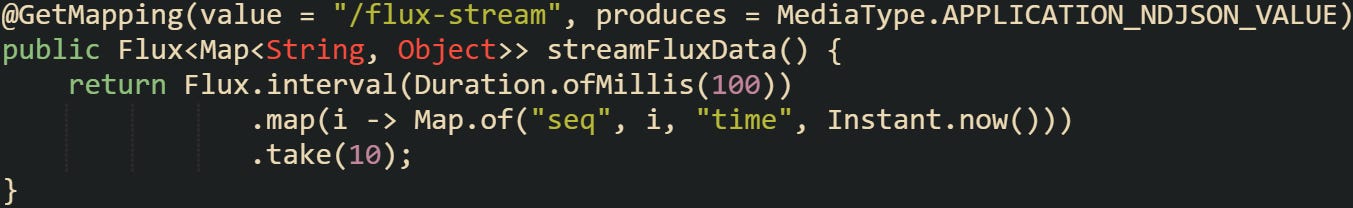

Comparing With Reactive Streaming

Spring WebFlux provides a reactive model built on Project Reactor, which offers non-blocking streaming and more control over backpressure. Instead of tying one thread to the response, WebFlux uses event loops to multiplex many streams over fewer threads.

A reactive controller can return a Flux of objects, which Spring writes out progressively:

With a newline-delimited media type such as application/x-ndjson, each element of the Flux is written as one JSON object on its own line. Clients start receiving data almost instantly.
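Demand-driven delivery can be illustrated without WebFlux by using the JDK's java.util.concurrent.Flow interfaces, which mirror the Reactive Streams contract that Project Reactor builds on. This is a swapped-in stand-in, not Reactor code: SubmissionPublisher takes the place of a Flux source, and LineCollector is a hypothetical subscriber that asks for one element at a time.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Flow;
import java.util.concurrent.SubmissionPublisher;
import java.util.concurrent.TimeUnit;

final class DemandDrivenSketch {

    // Hypothetical client: requests exactly one line at a time.
    static final class LineCollector implements Flow.Subscriber<String> {
        final List<String> lines = new CopyOnWriteArrayList<>();
        final CountDownLatch done = new CountDownLatch(1);
        private Flow.Subscription subscription;

        @Override
        public void onSubscribe(Flow.Subscription subscription) {
            this.subscription = subscription;
            subscription.request(1); // signal demand for the first element
        }

        @Override
        public void onNext(String line) {
            lines.add(line);
            subscription.request(1); // ask for the next one only after handling this one
        }

        @Override
        public void onError(Throwable error) {
            done.countDown();
        }

        @Override
        public void onComplete() {
            done.countDown();
        }
    }

    // Publishes count NDJSON lines and returns what the subscriber received.
    static List<String> run(int count) {
        LineCollector client = new LineCollector();
        try (SubmissionPublisher<String> publisher = new SubmissionPublisher<>()) {
            publisher.subscribe(client);
            for (int i = 1; i <= count; i++) {
                publisher.submit("{\"id\":" + i + "}\n"); // blocks if the subscriber's buffer is full
            }
        } // close() signals completion once pending items are delivered
        try {
            if (!client.done.await(5, TimeUnit.SECONDS)) {
                throw new IllegalStateException("subscriber did not complete in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return client.lines;
    }
}
```

The publisher only hands over elements the subscriber has requested, which is the same request-based backpressure idea WebFlux applies between the data source and the network.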

Reactive streaming shines when many clients are connected simultaneously, because it avoids tying up threads for long periods. The servlet approach works well for smaller scales or existing applications, but WebFlux is designed for cases where concurrency and backpressure need tighter control.

Memory Benefits in Practice

The memory advantage of streaming comes from writing data as it’s generated instead of building it all at once. A normal buffered response with millions of records forces the server to allocate large lists and arrays, while streaming only requires enough space for the current record.

To put it in perspective, imagine a system that needs to return 500,000 rows. Serializing that into memory first could take hundreds of megabytes. Streaming the same rows keeps memory flat, because each row is serialized and flushed before the next one is read.

This method means the heap size of the application barely changes no matter how many rows are sent, because no giant collection is held in memory.

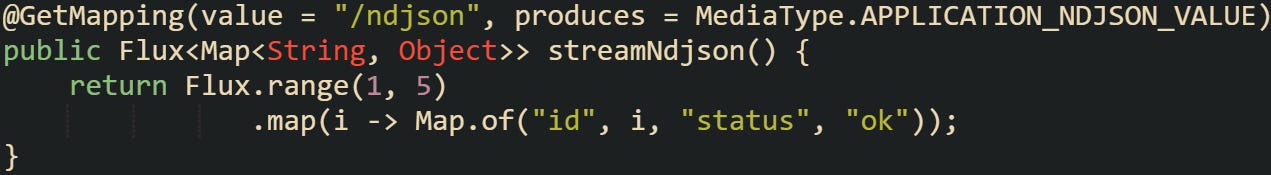

Error Handling During Streaming

One complexity with streaming is that errors can occur after part of the response has already been written. If a database connection fails or a network glitch interrupts the stream, the client may receive incomplete JSON. With a normal buffered response, a failure while loading the data happens before anything is sent, so the server can still return an error status. Once streaming has started, the status line and headers are already committed, so the failure shows up as partial data instead.

To manage this, some applications choose newline-delimited JSON (NDJSON). Each object is separated by a newline, so even if the stream is cut off, the client still has valid JSON objects up to that point.

Clients reading this stream can process records one by one, and if the stream ends abruptly they still have a set of complete objects.
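A small sketch shows why NDJSON tolerates truncation. It assumes each record is already serialized JSON with no raw newline inside it (JSON escapes newlines within strings, so this holds for compact output). The class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

final class NdjsonSketch {

    // Server side: one JSON object per line, each terminated by a newline.
    static String write(List<String> jsonObjects) {
        StringBuilder body = new StringBuilder();
        for (String object : jsonObjects) {
            body.append(object).append('\n');
        }
        return body.toString();
    }

    // Client side: keeps only records that were terminated by a newline.
    static List<String> completeRecords(String received) {
        List<String> records = new ArrayList<>();
        int start = 0;
        int newline;
        while ((newline = received.indexOf('\n', start)) >= 0) {
            String line = received.substring(start, newline).trim();
            if (!line.isEmpty()) {
                records.add(line);
            }
            start = newline + 1;
        }
        // Anything after the last newline is an unterminated, possibly partial record.
        return records;
    }
}
```

If a three-record stream is cut off in the middle of the third record, `completeRecords` still returns the first two intact. A truncated JSON array, by contrast, is no longer a valid document.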

Close or flush the JsonGenerator and let the container manage the servlet OutputStream. Avoid closing the raw response stream yourself. That keeps the system stable, even when a client disconnects early or an exception interrupts the loop.
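One way to follow this advice is to wrap the response stream so that closing the wrapper only flushes. The sketch below is an assumption-laden illustration with hypothetical class names, not something Spring requires. Jackson also exposes a JsonGenerator.Feature.AUTO_CLOSE_TARGET setting aimed at the same concern.

```java
import java.io.ByteArrayOutputStream;
import java.io.FilterOutputStream;
import java.io.IOException;
import java.io.OutputStream;
import java.io.OutputStreamWriter;
import java.io.UncheckedIOException;
import java.io.Writer;
import java.nio.charset.StandardCharsets;

final class CloseShieldSketch {

    // Wrapper whose close() flushes but leaves the underlying stream open.
    static final class NonClosing extends FilterOutputStream {
        NonClosing(OutputStream target) {
            super(target);
        }

        @Override
        public void write(byte[] b, int off, int len) throws IOException {
            out.write(b, off, len); // delegate in bulk instead of byte by byte
        }

        @Override
        public void close() throws IOException {
            flush(); // the owner of the target decides when it is closed
        }
    }

    // Stand-in for the container-managed stream; records whether close() was called.
    static final class Tracking extends ByteArrayOutputStream {
        boolean closed;

        @Override
        public void close() {
            closed = true;
        }
    }

    // Returns "<target closed?>:<body>" after the writer has been closed.
    static String demo() {
        Tracking container = new Tracking();
        try (Writer writer = new OutputStreamWriter(new NonClosing(container), StandardCharsets.UTF_8)) {
            writer.write("[]");
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return container.closed + ":" + new String(container.toByteArray(), StandardCharsets.UTF_8);
    }
}
```

Closing the writer pushes its buffered characters through, while the tracked stream underneath stays open for the container to finish and release.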

Conclusion

Streaming JSON in Spring Boot works by writing data directly to the response stream while the application keeps producing more. Jackson’s generator handles tokens one at a time, the servlet container manages buffering and flow control, and HTTP chunked transfer (or HTTP/2 framing) takes care of sending each piece as it’s ready. Together these mechanics let large results move steadily from server to client without holding everything in memory, which keeps delivery efficient and allows clients to begin reading earlier.