Timers and Heap Buckets in the Go Runtime

Timers in Go run behind the scenes to make features like time.After, time.NewTimer, and time.Ticker work. They give the impression of being just a simple wait followed by a signal or callback, but what actually happens is more structured. The Go runtime keeps all timers in a heap structure that lets it check for expirations quickly and keep things predictable even when thousands are active at once.

How Timers Are Organized Internally

Go’s runtime treats timers as first-class citizens in its scheduling system. Each one is stored as an object with a deadline and an action, then placed into a heap where the soonest expiration is always at the top. The heap design means the runtime doesn’t waste time scanning every entry, since it can immediately check the earliest deadline.

Creating a Timer

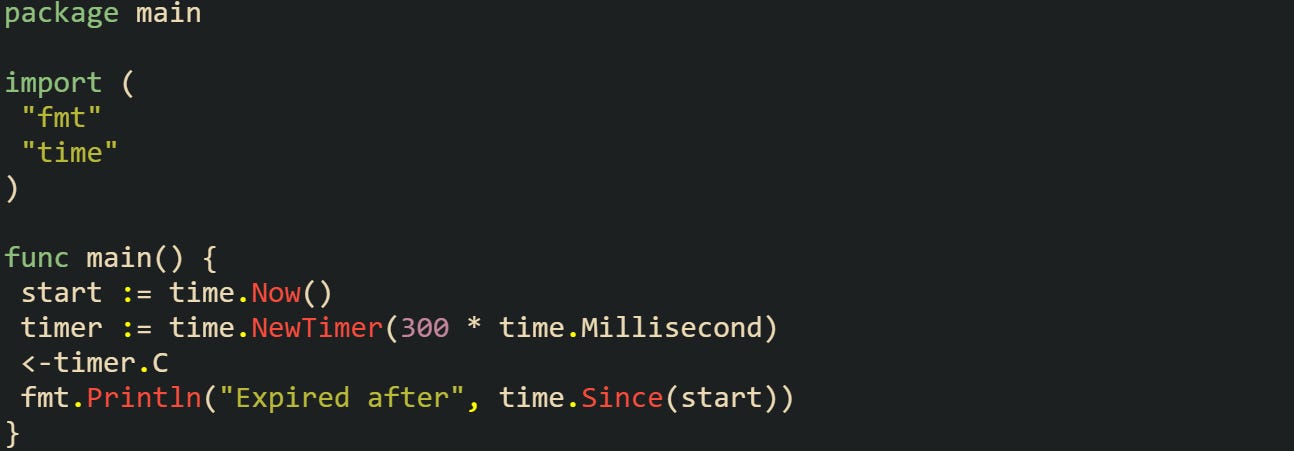

The act of creating a timer starts with the public functions in the time package like time.NewTimer or time.After. These functions don’t spin up a goroutine that sleeps. Instead, they allocate an internal runtime timer. That object stores fields such as the expiration time, a function pointer to trigger when the timer expires, and in some cases a reference to a channel. The expiration time is always expressed against the monotonic clock, which keeps timers immune from sudden jumps in the system wall clock.
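A minimal sketch of the channel-based path, using only the public time API. The helper name waitOnce is made up for illustration; time.NewTimer and the timer's C channel are the real API.

```go
package main

import (
	"fmt"
	"time"
)

// waitOnce (a hypothetical helper) creates a one-shot timer, blocks until
// it fires, and returns how long the wait actually took.
func waitOnce(d time.Duration) time.Duration {
	start := time.Now()
	timer := time.NewTimer(d) // registers a runtime timer; no sleeping goroutine is started
	<-timer.C                 // the runtime sends the fire time on this channel
	return time.Since(start)
}

func main() {
	elapsed := waitOnce(800 * time.Millisecond)
	fmt.Println("waited at least 800ms:", elapsed >= 800*time.Millisecond)
}
```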

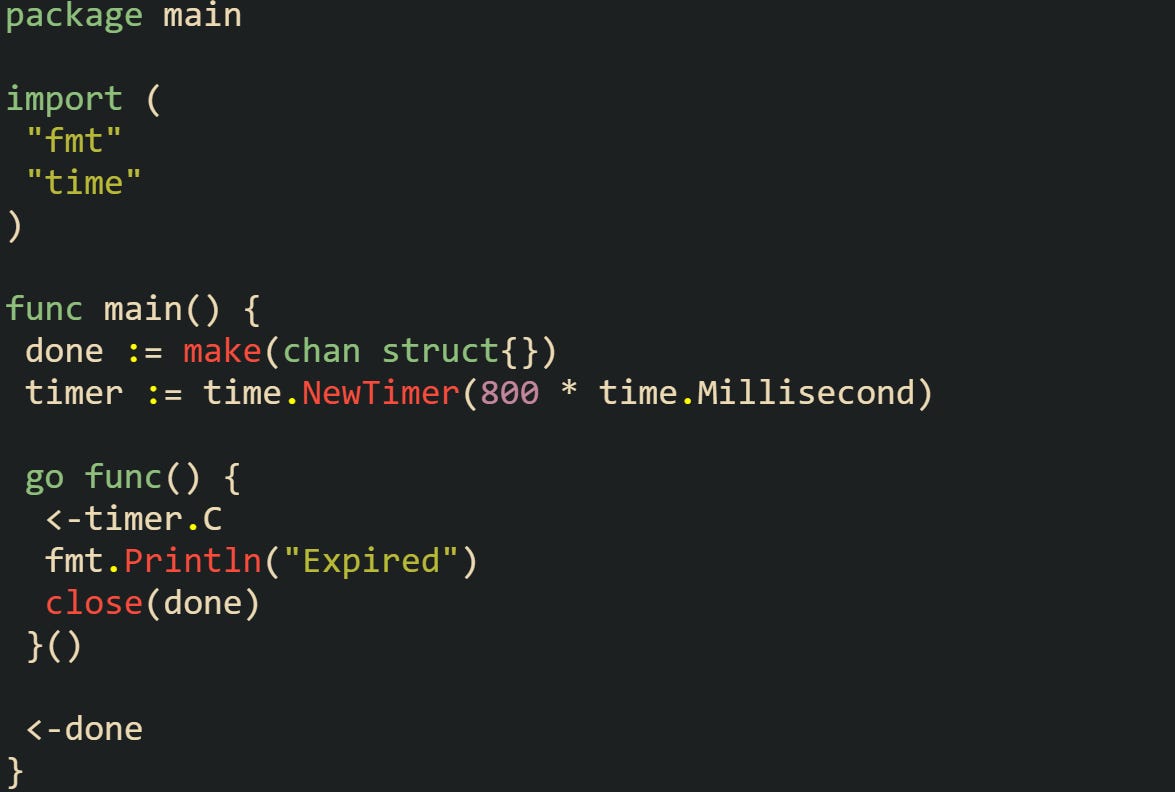

That small snippet builds a single timer with a short wait. Underneath, the runtime has pushed an entry into the processor’s timer heap with a target time equal to now plus 800ms.

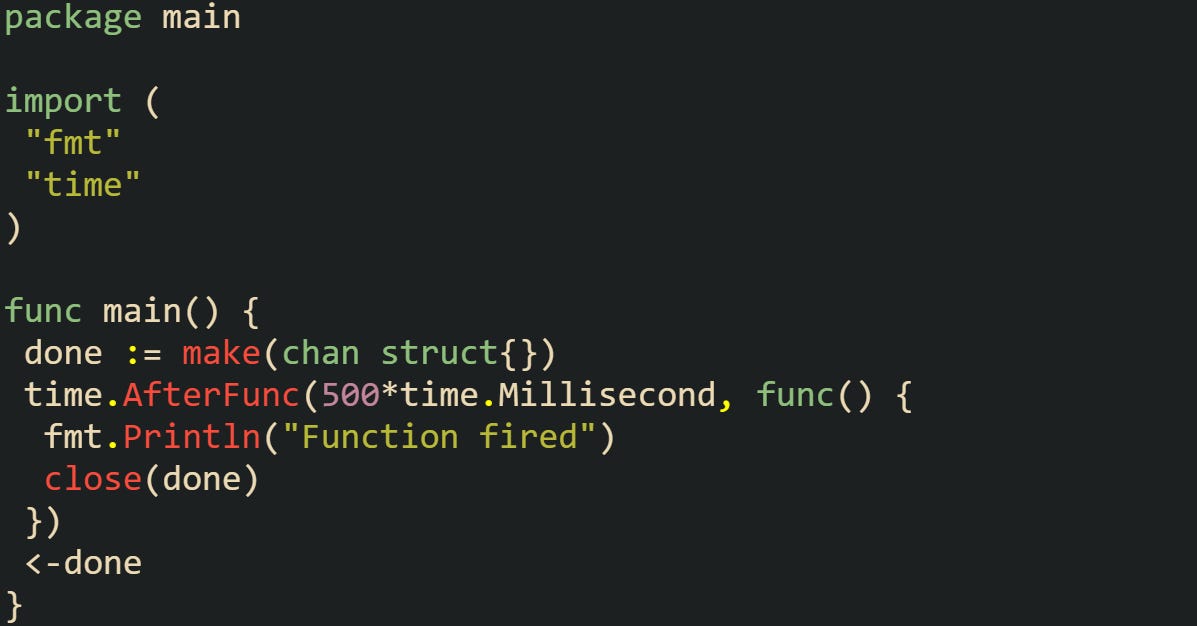

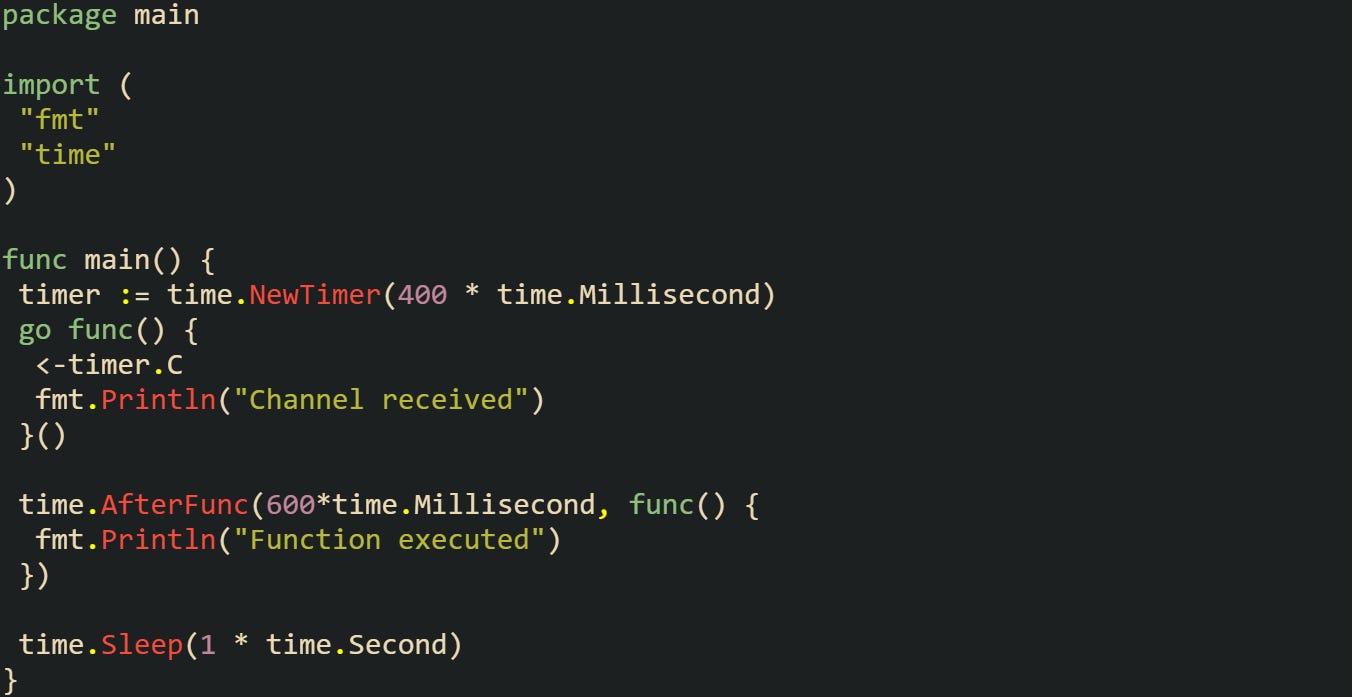

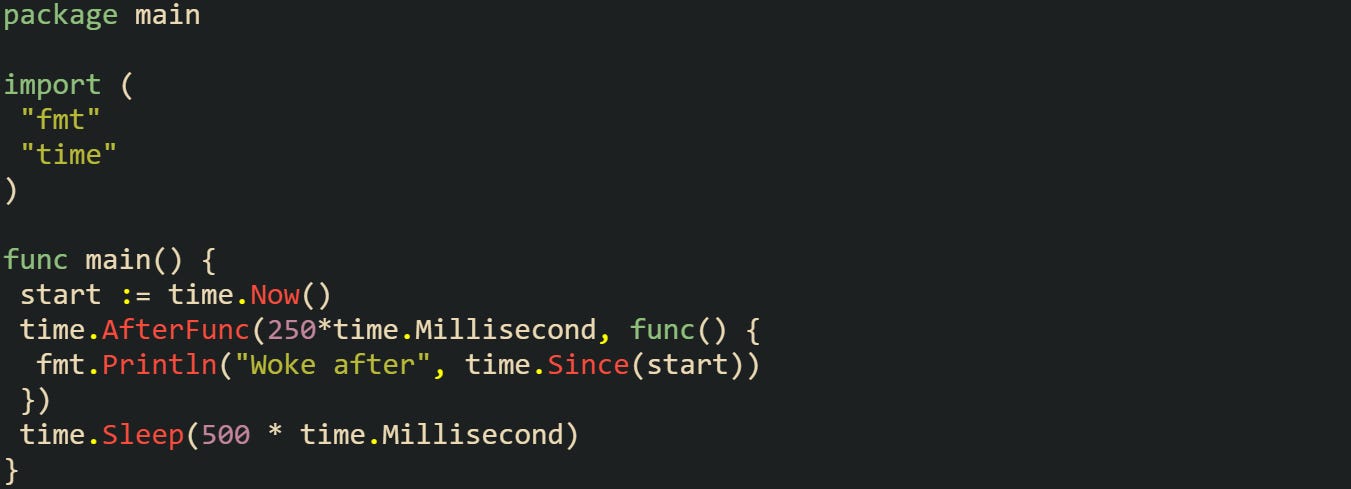

A slightly different example is time.AfterFunc, which lets you attach a function directly rather than listening on a channel. That skips the channel entirely: when the deadline passes, the runtime starts the function itself.

The runtime creates the same timer structure but fills the function pointer instead of a channel reference. Both styles of creation end with the timer being pushed into the heap of the processor that scheduled the call.
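The function-based style can be sketched as follows. The helper runLater and the done channel are assumptions added only so the example can observe the callback; time.AfterFunc itself is the real API.

```go
package main

import (
	"fmt"
	"time"
)

// runLater (a hypothetical helper) schedules a callback with time.AfterFunc
// and reports whether it ran within a generous timeout.
func runLater(d time.Duration) bool {
	done := make(chan struct{})

	// AfterFunc returns immediately. Once the deadline passes, the runtime
	// starts the callback in its own goroutine. The done channel exists
	// only so this sketch can observe that the callback ran.
	time.AfterFunc(d, func() { close(done) })

	select {
	case <-done:
		return true
	case <-time.After(d + time.Second):
		return false
	}
}

func main() {
	fmt.Println("callback ran:", runLater(100*time.Millisecond))
}
```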

Heap Insertion

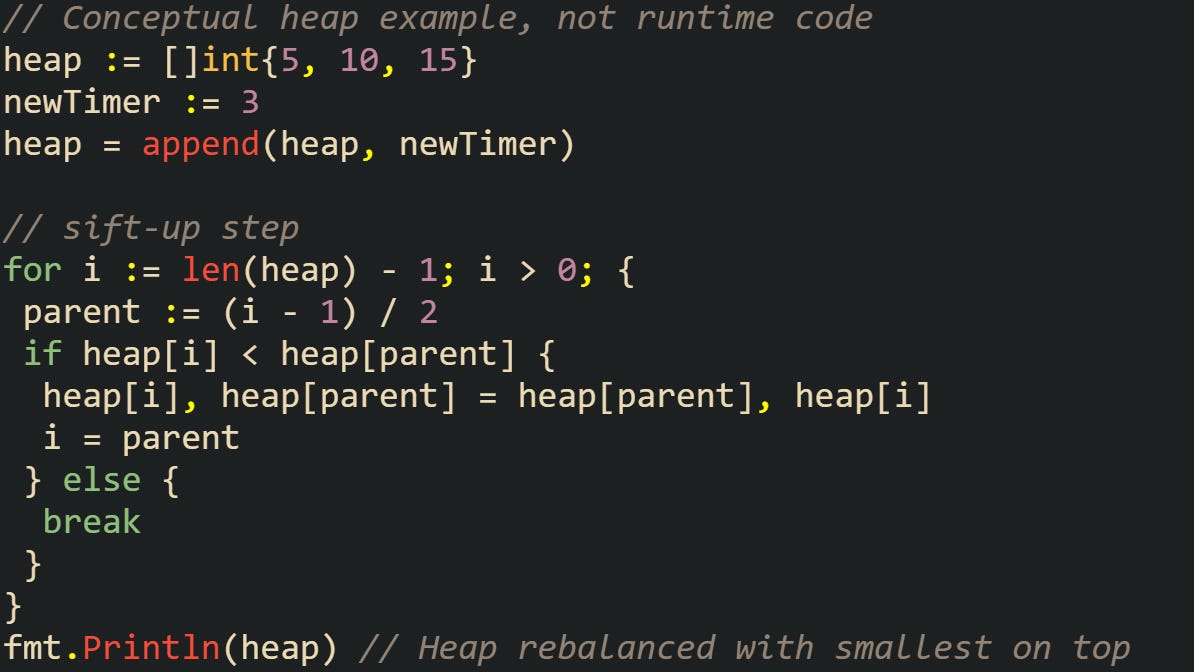

Once a timer is created, it needs to be placed where the runtime can track it. That’s handled by the heap insertion step. The heap is stored as a slice in memory and treated as a min-heap keyed on the expiration timestamp, so the earliest deadline always sits at the top. The runtime’s heap is 4-ary rather than binary: each node has up to four children, which keeps the tree shallower. When a new timer is inserted, the runtime appends it to the end of the slice, then performs a sift-up to restore the ordering. If the new timer expires sooner than its parent, the two swap places. This continues until the new entry is no longer smaller than its parent or it reaches the top. The process is efficient because it climbs at most O(log n) levels in the worst case.
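The append-then-sift-up step can be sketched in a few lines. Everything here (sketchTimer, siftUp, push, heapArity) is a made-up stand-in, not the runtime's code. The arity is a constant so the same loop covers a classic binary heap (2) as well as the four-children layout the Go runtime's timer heap uses.

```go
package main

import "fmt"

// heapArity is the number of children per node. Two gives a classic
// binary heap; the Go runtime's own timer heap uses four.
const heapArity = 4

// sketchTimer is a made-up stand-in for the runtime's timer record.
type sketchTimer struct {
	when int64 // deadline in nanoseconds on some monotonic scale
}

// siftUp moves heap[i] toward the root until its parent is not later.
func siftUp(heap []sketchTimer, i int) {
	for i > 0 {
		parent := (i - 1) / heapArity
		if heap[i].when >= heap[parent].when {
			break // ordering restored
		}
		heap[i], heap[parent] = heap[parent], heap[i]
		i = parent
	}
}

// push appends t to the slice and restores the heap ordering.
func push(heap []sketchTimer, t sketchTimer) []sketchTimer {
	heap = append(heap, t)
	siftUp(heap, len(heap)-1)
	return heap
}

func main() {
	var heap []sketchTimer
	for _, when := range []int64{500, 300, 900, 100, 700} {
		heap = push(heap, sketchTimer{when: when})
	}
	fmt.Println("earliest deadline:", heap[0].when) // prints "earliest deadline: 100"
}
```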

This isn’t Go’s actual runtime code, but it helps visualize the logic of a sift-up process. The actual runtime handles more fields and also tracks whether a timer is active or deleted. But the principle is the same: by arranging timers in a heap, the runtime never needs to scan every timer to find the next one to expire.

Heap ordering also has implications when timers are reset. Resetting a timer adjusts its deadline and can move it within the heap to keep ordering valid. In practice, reusing a timer with Reset avoids a fresh allocation and is commonly preferred to creating a new one.
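As a small illustration of reuse, the sketch below drives several waits from one timer. The helper fireRepeatedly is hypothetical. It only calls Reset after receiving from the channel, which sidesteps the stop-and-drain questions that come up when resetting a timer that may still be pending.

```go
package main

import (
	"fmt"
	"time"
)

// fireRepeatedly (a hypothetical helper) reuses one timer for n waits and
// returns how many times it fired.
func fireRepeatedly(n int, d time.Duration) int {
	timer := time.NewTimer(d)
	defer timer.Stop() // cancels the final, unused Reset

	fired := 0
	for i := 0; i < n; i++ {
		<-timer.C // the timer has fired and its channel is now empty
		fired++
		timer.Reset(d) // same timer object, new deadline
	}
	return fired
}

func main() {
	fmt.Println("fired:", fireRepeatedly(3, 50*time.Millisecond)) // prints "fired: 3"
}
```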

Buckets Per Processor

Go’s runtime uses logical processors (the P structures inside the scheduler) to spread goroutines across threads. Each P maintains its own timer heap, rather than shoving every timer into one global structure. This design avoids a global lock that would otherwise become a choke point as more goroutines use timers.

When a goroutine creates a timer, it’s generally attached to the heap of the processor that the goroutine is running on. That way, the scheduler thread responsible for that processor can manage the timer without needing to coordinate with all the others.
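A rough way to picture this from user code is a handful of goroutines that each create their own timer. The helper runWorkers is an assumption for illustration. The public API does not reveal which P ends up holding each timer, so the sketch only shows the pattern, not the placement.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

// runWorkers (a hypothetical helper) starts n goroutines, each waiting on
// its own timer, and returns how many timers fired.
func runWorkers(n int) int {
	var wg sync.WaitGroup
	var mu sync.Mutex
	fired := 0

	for id := 1; id <= n; id++ {
		wg.Add(1)
		go func(id int) {
			defer wg.Done()
			// Each worker uses a different delay. Which P's heap holds the
			// timer depends on where the goroutine happens to be running.
			timer := time.NewTimer(time.Duration(id) * 50 * time.Millisecond)
			<-timer.C

			mu.Lock()
			fired++
			mu.Unlock()
		}(id)
	}

	wg.Wait()
	return fired
}

func main() {
	fmt.Println("timers fired:", runWorkers(4)) // prints "timers fired: 4"
}
```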

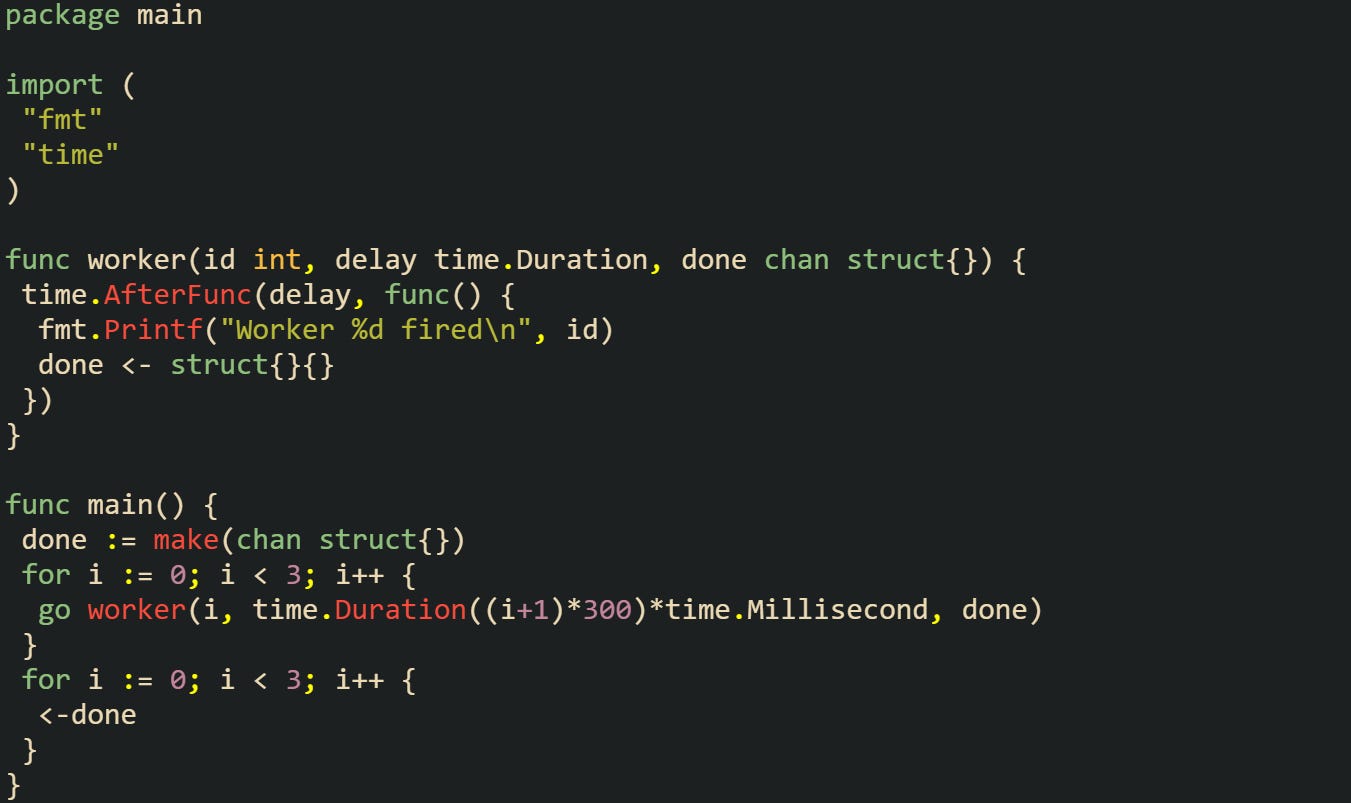

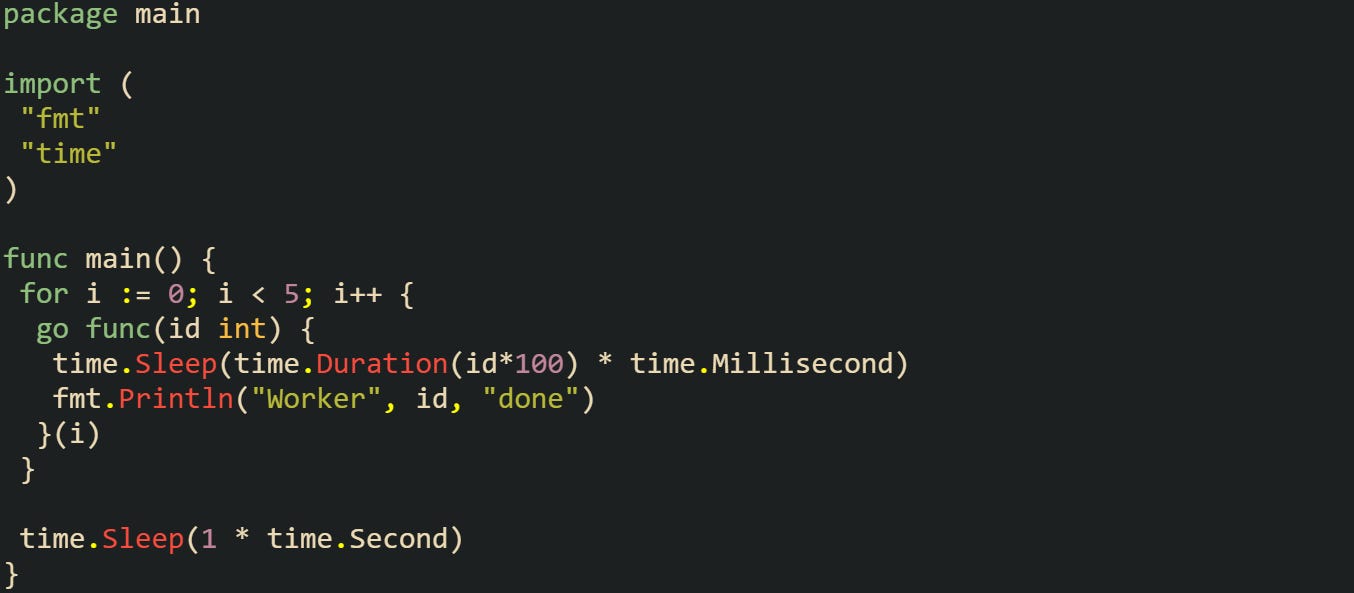

Each worker here spawns its own timer with different delays. Internally, those timers are likely distributed across the processors managing those goroutines. No single heap has to carry the full weight of all timers.

Buckets per processor also allow timer checks to scale with the number of processors. Each P normally only needs to check its own heap, which reduces contention. That doesn’t stop the runtime from moving timers around when necessary, but the default case spreads the workload naturally.

How Timers Are Checked and Fired

Creating timers and placing them on heaps is only the first step. What makes them useful is the process of monitoring those heaps, detecting expirations at the right time, and then delivering results back to goroutines. The runtime ties this directly to scheduling, so checks are lightweight and happen in rhythm with other runtime activities.

Expiry Checking

The scheduler doesn’t wake up and sweep through all timers on every cycle. Instead, each P looks at the top of its own heap. Because the heap property guarantees that the smallest deadline is always there, the check is quick. If the earliest deadline hasn’t passed, no further timers can have expired, and the runtime moves on without doing extra work.

When the top entry has expired, the runtime removes it and marks it ready. That may trigger a goroutine waiting on a channel or a function scheduled with time.AfterFunc. The cycle repeats until the top entry is in the future. This structure keeps the cost of checks low even when there are thousands of active timers.
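From the user's side, all of that machinery sits behind a single channel receive. The sketch below waits on one timer while also listening for a result; awaitResult and its parameters are hypothetical names chosen for the example.

```go
package main

import (
	"fmt"
	"time"
)

// awaitResult (a hypothetical helper) waits for a value on results but
// gives up once a single timer fires.
func awaitResult(results <-chan string, timeout time.Duration) string {
	timer := time.NewTimer(timeout)
	defer timer.Stop() // releases the timer early if a result wins the race

	select {
	case r := <-results:
		return r
	case <-timer.C:
		return "timed out"
	}
}

func main() {
	results := make(chan string) // nothing is ever sent, so the timer wins
	fmt.Println(awaitResult(results, 200*time.Millisecond)) // prints "timed out"
}
```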

This code waits on a single timer. Inside the runtime, the scheduler checks the processor’s heap and fires when the top entry passes the deadline. That single check is the same pattern applied across heaps with many timers. Some timers are set far into the future, and those don’t add overhead during regular expiry checks. The runtime only compares the top entry, so the presence of distant timers doesn’t slow anything down.
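The check-the-top-and-stop pattern can be modeled outside the runtime. The sketch below is a simplified model, not the scheduler's code: entry, deadlineHeap, newHeap, and runExpired are made-up names, and it leans on the standard container/heap package (a binary heap), which is enough to show that distant deadlines are never visited.

```go
package main

import (
	"container/heap"
	"fmt"
)

// entry and deadlineHeap are made-up types for this sketch.
type entry struct {
	name string
	when int64 // deadline on an arbitrary integer clock
}

type deadlineHeap []entry

func (h deadlineHeap) Len() int           { return len(h) }
func (h deadlineHeap) Less(i, j int) bool { return h[i].when < h[j].when }
func (h deadlineHeap) Swap(i, j int)      { h[i], h[j] = h[j], h[i] }
func (h *deadlineHeap) Push(x any)        { *h = append(*h, x.(entry)) }
func (h *deadlineHeap) Pop() any {
	old := *h
	last := old[len(old)-1]
	*h = old[:len(old)-1]
	return last
}

// newHeap builds a heap from the given entries.
func newHeap(entries ...entry) *deadlineHeap {
	h := deadlineHeap(entries)
	heap.Init(&h)
	return &h
}

// runExpired pops entries while the top deadline is at or before now.
// It returns the names fired in deadline order and the number of
// top-of-heap comparisons it made.
func runExpired(h *deadlineHeap, now int64) (fired []string, checks int) {
	for h.Len() > 0 {
		checks++
		if (*h)[0].when > now {
			break // earliest deadline is in the future, so nothing else expired
		}
		fired = append(fired, heap.Pop(h).(entry).name)
	}
	return fired, checks
}

func main() {
	h := newHeap(
		entry{name: "far-1", when: 9000},
		entry{name: "soon", when: 10},
		entry{name: "far-2", when: 8000},
		entry{name: "sooner", when: 5},
	)
	fired, checks := runExpired(h, 20)
	fmt.Println(fired, checks, h.Len()) // prints "[sooner soon] 3 2"
}
```

Two entries fire, and the loop stops on the third comparison without ever looking at the second distant entry.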

Firing Behavior

When a timer expires, the runtime has two common actions. For channel-based timers like time.NewTimer or time.After, it sends a value on the channel. For function-based timers like time.AfterFunc, it queues the function to run as a new goroutine. Either way, the event is delivered back into ordinary Go scheduling.
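Both delivery paths can be exercised side by side. In this sketch the helper fireBoth is hypothetical, and the WaitGroup is there only so the function can wait for the callback goroutine before returning.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

// fireBoth (a hypothetical helper) arms one function-based timer and one
// channel-based timer with the same delay and reports which paths delivered.
func fireBoth(d time.Duration) (viaChannel, viaFunc bool) {
	var wg sync.WaitGroup
	wg.Add(1)

	// Function-based: the callback runs in its own goroutine on expiry.
	time.AfterFunc(d, func() {
		viaFunc = true
		wg.Done()
	})

	// Channel-based: the runtime sends on the timer's channel on expiry.
	chTimer := time.NewTimer(d)
	<-chTimer.C
	viaChannel = true

	wg.Wait() // ensures the callback's write is visible before returning
	return viaChannel, viaFunc
}

func main() {
	ch, fn := fireBoth(100 * time.Millisecond)
	fmt.Println("channel:", ch, "func:", fn) // prints "channel: true func: true"
}
```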

Two styles of firing happen here: one through a channel and one through a direct function call. The runtime doesn’t treat these as separate systems. Both are driven from the same heap entries, with different callback targets attached.

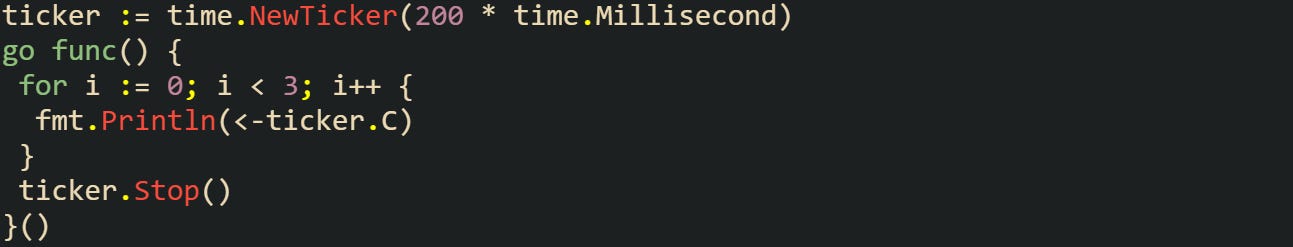

Repeating timers such as those created by time.NewTicker behave a little differently. After firing, they don’t disappear. The runtime recalculates the next deadline and places them back into the heap. This rescheduling step lets tickers continue indefinitely until stopped.
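A short ticker loop shows the repeating case from user code. The helper countTicks is an assumption for the example; time.NewTicker and Stop are the real API.

```go
package main

import (
	"fmt"
	"time"
)

// countTicks (a hypothetical helper) receives n ticks from a single ticker
// and returns how many it saw.
func countTicks(n int, interval time.Duration) int {
	ticker := time.NewTicker(interval)
	defer ticker.Stop() // ends the rescheduling cycle

	ticks := 0
	for range ticker.C {
		ticks++
		if ticks == n {
			break
		}
	}
	return ticks
}

func main() {
	fmt.Println("ticks received:", countTicks(3, 100*time.Millisecond)) // prints "ticks received: 3"
}
```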

This ticker is reinserted into the heap with each new cycle, keeping the sequence going. It doesn’t mean a new timer is created each time. The runtime updates the existing one and restores the heap property.

Timer Stealing

Because timers are tied to processor-local heaps, uneven workloads can appear. One processor may hold many timers while another is almost idle. To prevent lag in these situations, the runtime uses timer stealing. When a processor has little to do, it can check another processor’s timer heap and run the timers that are ready there. That can make goroutines runnable, which the idle processor can then pick up. This balances the load so that timers don’t get stuck waiting behind too much local activity. Stealing is not the normal path but rather a correction mechanism for imbalance.

A quick experiment with goroutines and timers helps illustrate the idea of uneven distribution.
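One way to set up such an experiment is sketched below. The helper unevenTimers and its parameters are made up for illustration. The public API does not expose which P owns a timer or whether stealing occurred, so the sketch can only create a lopsided load and report the worst lateness it observed.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

// unevenTimers (a hypothetical helper) starts `workers` goroutines. Worker w
// creates w*perStep timers with a delay of w*base, so later workers hold
// many more timers than earlier ones. It returns how many callbacks ran and
// the worst lateness observed.
func unevenTimers(workers, perStep int, base time.Duration) (int, time.Duration) {
	var (
		wg    sync.WaitGroup
		mu    sync.Mutex
		fired int
		worst time.Duration
	)

	for w := 1; w <= workers; w++ {
		count := w * perStep
		wg.Add(count)
		go func(w, count int) {
			delay := time.Duration(w) * base
			for j := 0; j < count; j++ {
				due := time.Now().Add(delay)
				time.AfterFunc(delay, func() {
					late := time.Since(due) // how far past the deadline we ran
					mu.Lock()
					fired++
					if late > worst {
						worst = late
					}
					mu.Unlock()
					wg.Done()
				})
			}
		}(w, count)
	}

	wg.Wait()
	return fired, worst
}

func main() {
	fired, worst := unevenTimers(4, 50, 20*time.Millisecond)
	fmt.Println("callbacks run:", fired)
	fmt.Println("worst lateness:", worst)
}
```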

Each goroutine here schedules work with different delays. Depending on processor scheduling, timers for some goroutines might end up grouped together. If one processor is heavy with timers and another is light, the runtime can balance them with stealing, which keeps latency predictable across all goroutines.

Handling Sleep and Wakeup

Timers also guide when the runtime decides to sleep threads during idle periods. If no goroutine has immediate work, the scheduler asks how long it can pause before something important happens. The top timer deadline provides that answer. When the next expiry is far away, the runtime tells the operating system it can sleep until that deadline or until an external event interrupts it. If the expiry is near, the runtime schedules a shorter sleep so it can wake in time to fire the timer.
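The effect is easy to observe from user code, even though the thread-level sleep itself is hidden. In this sketch the helper firedDuringSleep is hypothetical, and the delays are chosen with a wide margin so the callback has ample time to run before the sleep ends.

```go
package main

import (
	"fmt"
	"sync/atomic"
	"time"
)

// firedDuringSleep (a hypothetical helper) arms a timer, puts the calling
// goroutine to sleep for longer than the delay, and reports whether the
// callback had already run when the sleep ended.
func firedDuringSleep(delay, sleep time.Duration) bool {
	var fired atomic.Bool

	time.AfterFunc(delay, func() { fired.Store(true) })

	// While this goroutine sleeps there may be no other runnable work, so
	// the runtime is free to park threads until the next timer deadline.
	time.Sleep(sleep)
	return fired.Load()
}

func main() {
	ok := firedDuringSleep(100*time.Millisecond, 500*time.Millisecond)
	fmt.Println("fired while main slept:", ok)
}
```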

This timer fires while the main goroutine is asleep. Under the surface, the runtime aligns its thread wakeups with the timer deadline so the event is delivered right on schedule.

Sleep integration is important for energy and resource use. Instead of polling constantly, the runtime relies on the earliest timer deadline to decide the exact point where it must wake again. That keeps both responsiveness and efficiency in balance.

Conclusion

Go’s runtime treats timers as part of the scheduling engine itself rather than simple delays. They’re arranged in processor-local heaps, checked through quick comparisons against the nearest deadline, and fired through either channels or function calls. Rescheduling for tickers, balancing through timer stealing, and aligning thread sleep with expiry times all come together to keep timers efficient and predictable even under heavy load.